
AI Painting Column: SDXL ControlNet API Tutorial (36)


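There is no such thing as big or small work: do a big task in a small way and it becomes small; do a small task in a big way and it becomes big. (Tao Xingzhi)

hires.fix (high-resolution fix) JSON; remember to change the sampling method:

{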

"enable_hr": true,

"denoising_strength": 0.7,

"firstphase_width": 0,

"firstphase_height": 0,

"hr_scale": 2,

"hr_upscaler": "Latent",

"hr_second_pass_steps": 25,

"hr_resize_x": 0,

"hr_resize_y": 0,

"hr_sampler_name": "DPM++ 2M SDE Karras",

"hr_prompt": "",

"hr_negative_prompt": "",

"prompt": "((8k, best quality)),((Exquisite facial features)),((Anime style:1.2)),(1girl, full body,Holding a laser cannon),((looking at viewer,Standing posture)),((white pantyhose,JK Style,JK short skirt,white Football Baby Knee Socks,flashlight)),blue eyes,((white very_long_hair)),Cyberpunk, holographic aura, surreal science fiction art, future science fiction aesthetics, Han fu style clothing, clothing influenced by ancient Chinese operas,Space, Unreal, Ruins, Future Technology, Planets,masterpiece, best quality, masterpiece,best quality,ultra-detailed,very detailed illustrations,extremely detailed,intricate details,highres,super complex details,extremely detailed 8k cg wallpaper",

"styles": [

"string"

],

"seed": -1,

"subseed": -1,

"subseed_strength": 0,

"seed_resize_from_h": -1,

"seed_resize_from_w": -1,

"sampler_name": "DPM++ 2M SDE Karras",

"batch_size": 1,

"n_iter": 1,

"steps": 20,

"cfg_scale": 7,

"width": 512,

"height": 512,

"restore_faces": false,

"tiling": false,

"do_not_save_samples": false,

"do_not_save_grid": false,

"negative_prompt": "string",

"eta": 0,

"s_min_uncond": 0,

"s_churn": 0,

"s_tmax": 0,

"s_tmin": 0,

"s_noise": 1,

"override_settings": {},

"override_settings_restore_afterwards": true,

"script_args": [],

"sampler_index": "Euler a",

"script_name": "",

"send_images": true,

"save_images": true,

"alwayson_scripts": {}

}

Tile

import torch

from PIL import Image

from diffusers import ControlNetModel, DiffusionPipeline

from diffusers.utils import load_image

def resize_for_condition_image(input_image: Image.Image, resolution: int):
    # Scale the shorter side to `resolution`, then snap both sides to multiples of 64
    input_image = input_image.convert("RGB")
    W, H = input_image.size
    k = float(resolution) / min(H, W)
    H *= k
    W *= k
    H = int(round(H / 64.0)) * 64
    W = int(round(W / 64.0)) * 64
    img = input_image.resize((W, H), resample=Image.LANCZOS)
    return img

controlnet = ControlNetModel.from_pretrained('lllyasviel/control_v11f1e_sd15_tile',
                                             torch_dtype=torch.float16)

pipe = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5",
                                         custom_pipeline="stable_diffusion_controlnet_img2img",
                                         controlnet=controlnet,
                                         torch_dtype=torch.float16).to('cuda')

pipe.enable_xformers_memory_efficient_attention()

source_image = load_image('https://huggingface.co/lllyasviel/control_v11f1e_sd15_tile/resolve/main/images/original.png')

condition_image = resize_for_condition_image(source_image, 1024)

image = pipe(prompt="best quality",
             negative_prompt="blur, lowres, bad anatomy, bad hands, cropped, worst quality",
             image=condition_image,
             controlnet_conditioning_image=condition_image,
             width=condition_image.size[0],
             height=condition_image.size[1],
             strength=1.0,
             generator=torch.manual_seed(0),
             num_inference_steps=32,
             ).images[0]

image.save('output.png')
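The size arithmetic inside resize_for_condition_image can be checked without loading any image. The sketch below only mirrors that arithmetic; the function name `condition_size` and the `multiple` parameter are hypothetical additions for illustration (the Tile script hardcodes 64).

```python
def condition_size(width: int, height: int, resolution: int, multiple: int = 64) -> tuple[int, int]:
    """Return the (width, height) that resize_for_condition_image would resize to."""
    # The shorter side is scaled to `resolution`; the longer side keeps the aspect ratio.
    scale = float(resolution) / min(height, width)
    # Both sides are then rounded to the nearest multiple (64 in the Tile script).
    new_width = int(round(width * scale / multiple)) * multiple
    new_height = int(round(height * scale / multiple)) * multiple
    return new_width, new_height


print(condition_size(512, 768, 1024))  # (1024, 1536)
print(condition_size(500, 375, 1024))  # (1344, 1024)
```

Because of the rounding, the aspect ratio can shift slightly: a 500x375 input becomes 1344x1024 rather than an exact 4:3.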

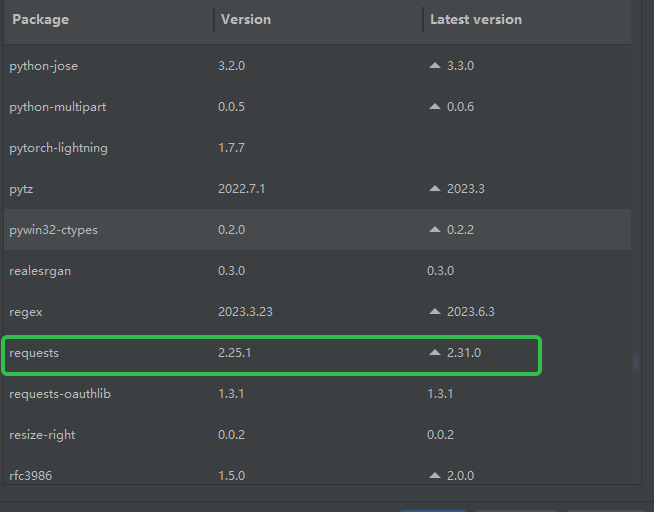

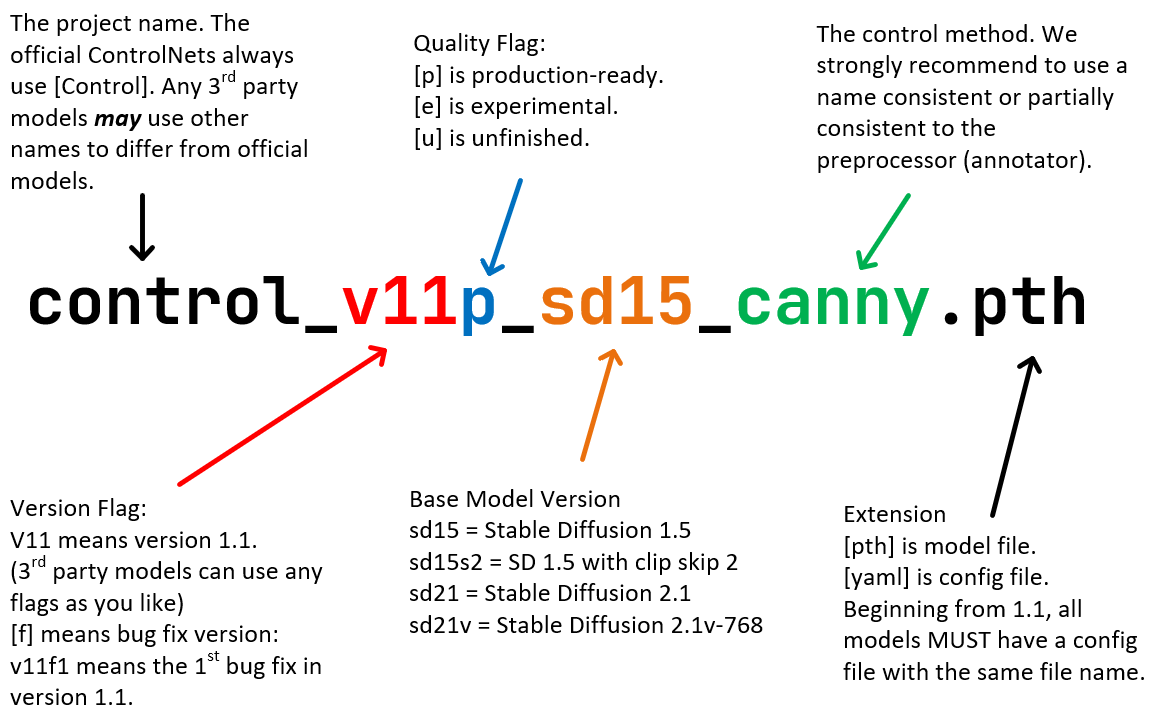

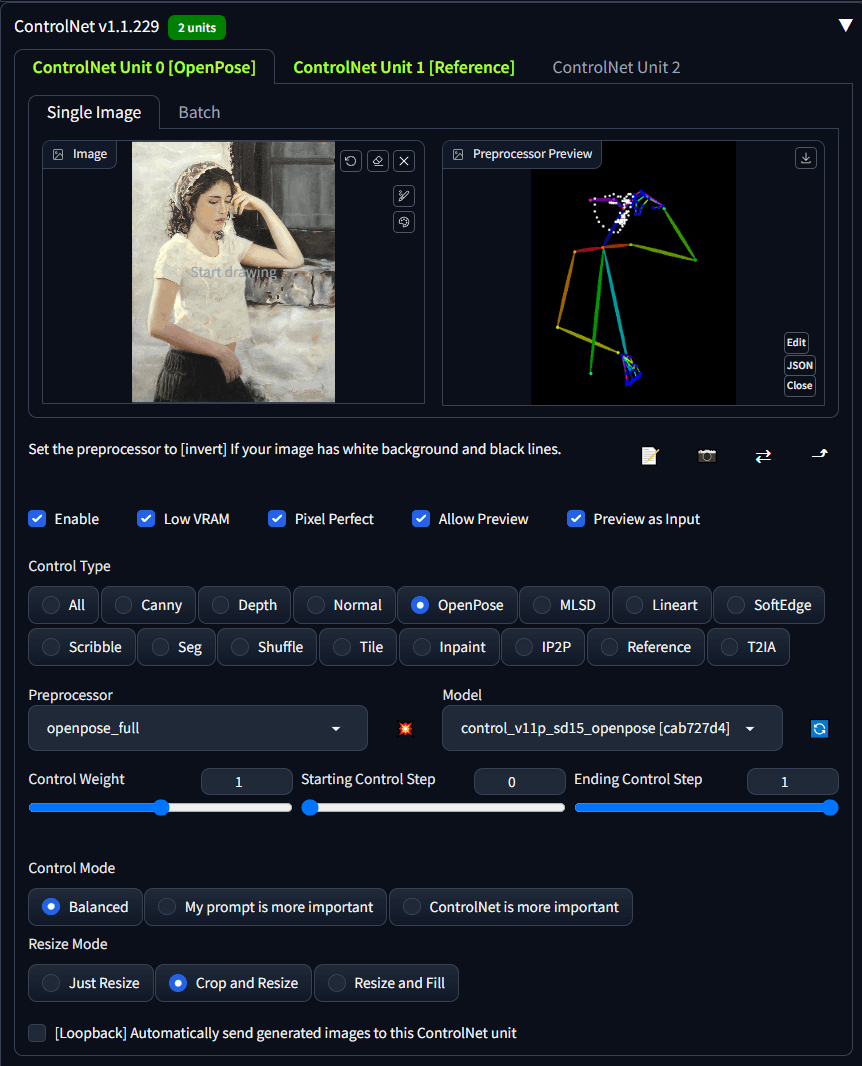

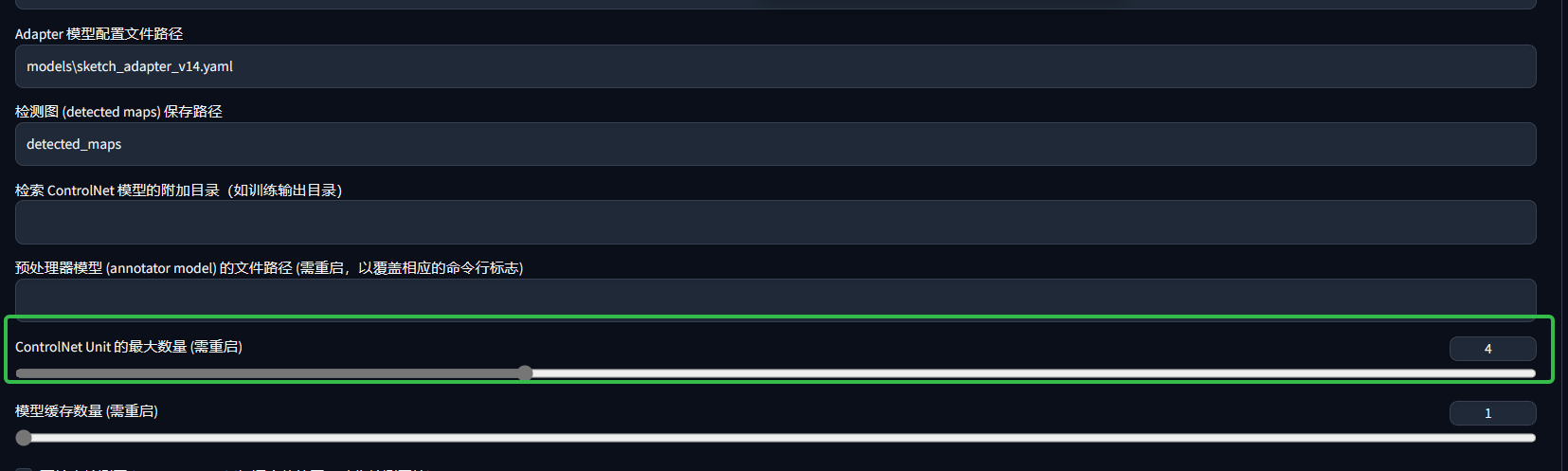

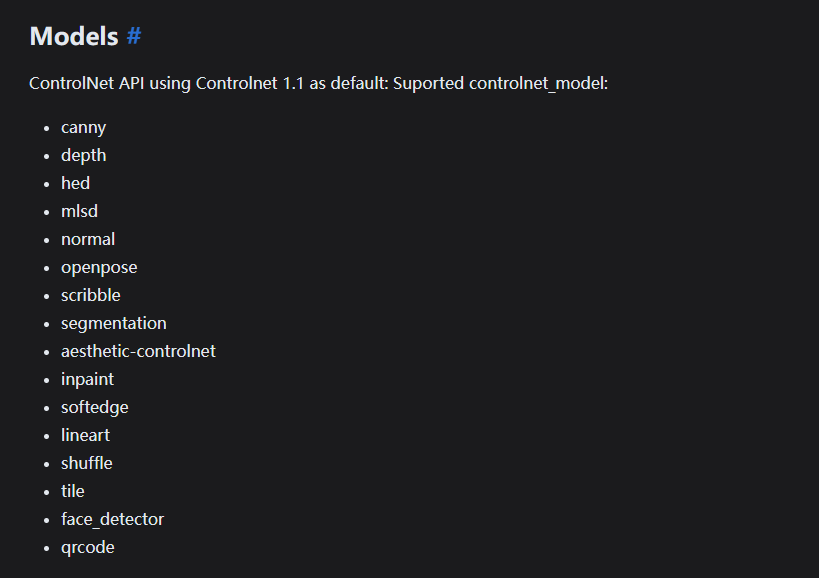

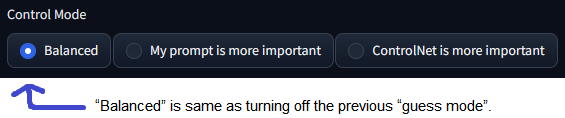
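The hires.fix JSON at the start of this article uses the field names of the WebUI's txt2img request body, so it can be posted to a locally running WebUI. The following is a minimal sketch, assuming the WebUI was started with its API enabled and listens on 127.0.0.1:7860; the helper names (`build_hires_payload`, `decode_images`, `txt2img`) are hypothetical, and the payload is a reduced subset of the full JSON.

```python
import base64
import json
import urllib.request

# Assumed local WebUI address (adjust host and port to your own setup).
BASE_URL = "http://127.0.0.1:7860"


def build_hires_payload(prompt: str, sampler: str = "DPM++ 2M SDE Karras") -> dict:
    """Return a reduced txt2img payload with hires.fix enabled (values taken from the article's JSON)."""
    return {
        "prompt": prompt,
        "sampler_name": sampler,
        "steps": 20,
        "cfg_scale": 7,
        "width": 512,
        "height": 512,
        "enable_hr": True,
        "hr_scale": 2,
        "hr_upscaler": "Latent",
        "hr_second_pass_steps": 25,
        "denoising_strength": 0.7,
    }


def decode_images(response_body: dict) -> list[bytes]:
    """Decode the Base64 strings in the response's "images" list into raw image bytes."""
    return [base64.b64decode(item) for item in response_body.get("images", [])]


def txt2img(payload: dict, base_url: str = BASE_URL) -> list[bytes]:
    """POST the payload to /sdapi/v1/txt2img and return the decoded images."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return decode_images(json.load(response))
```

Calling `txt2img(build_hires_payload("best quality, 1girl"))` against a running WebUI should return a list of PNG byte strings that can be written to disk with `open(path, "wb")`.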

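Getting image info via the API

import base64
import requests

def png_to_base64(file_path):
    # Read the PNG and return its Base64 encoding as a str so it can be serialized to JSON
    with open(file_path, "rb") as image_file:
        encoded_string = base64.b64encode(image_file.read()).decode("utf-8")
    return encoded_string

# Base64 data of the image
image_base64 = png_to_base64(r'E:\sd-webui-aki\sd-webui-aki-v4\outputs\txt2img-images\11841-2502549950-(8k, original image, best quality, masterpiece_ 1.2),aerial garden, Leaning against a circular window of a house,(A girl upper_b.png')

# API endpoint URL
url = "http://127.0.0.1:7860/sdapi/v1/png-info"

# Build the request parameters
payload = {
    "image": image_base64
}

# Send the POST request
response = requests.post(url, json=payload)

# Print the response
print(response.text)

Official sites:
https://huggingface.co/lllyasviel/ControlNet-v1-1/tree/main
https://github.com/Mikubill/sd-webui-controlnet

ControlNet: Control + Net (network)

I. Basic information

Target product: ControlNet API
Version tested: v1.1.234
Test device: Windows, SD
Test time: 2023-07-30 08:50:33

II. Product information

Product type: AI painting, SD plugin
Product positioning: plugin

2. Key product features

| Feature | Use |
| --- | --- |
| reference_only (reference the input image only) | Keeps the character details of the original image; style transfer |
| invert | Simple colouring |
| openpose (pose hint) | Pose reference (people) |
| seg | Color-block hint (scenes) |
| shuffle | Redistributes the image's colors (color); uses a color image as source material |
| tile | Adds detail. Can be combined with depth-of-field removal: use a precise background-removal tool to get the character mask and feed it back to the script |
| media_pipe face (facial edge detection) | Generating facial expressions |
| hed (edge detection) | AI animation generation |
| canny (edge detection) | Outline hint |
| lineart (line-art extraction) | Outline hint |
| softedge (soft edge detection) | Outline hint |
| depth | Scene depth hint |

III. Installation

Install from URL: https://github.com/Mikubill/sd-webui-controlnet.git

8.1. Opening multiple ControlNet unit tabs

1. Open Settings.
2. Search for, or scroll down to, ControlNet, change the maximum number of units, and restart the UI.
3. Remember to tick Enable before using a unit; in the latest version the unit turns green once enabled.

IV. API

Model

| Parameter | Description |
| --- | --- |
| model_id | The ID of the model to be used. It can be a public model or your own trained model. |
| controlnet_model | ControlNet model ID. It can be from the models list or user-trained. |
| controlnet_type | ControlNet model type. It can be from the models list. |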
| auto_hint | Whether to generate the hint image automatically. |
| guess_mode | Defaults to true; if not passed, the best model is chosen automatically. |
| prompt | Text prompt. |
| negative_prompt | Items you don't want in the image. |
| init_image | Link to the initial image. |
| control_image | Link to the ControlNet image. |
| mask_image | Link to the mask image for inpainting. |
| width | Image width. Maximum height and width: 1024x1024. |
| height | Image height. Maximum height and width: 1024x1024. |
| samples | Number of images to be returned in the response. The maximum value is 4. |
| scheduler | Use it to set a scheduler. |
| tomesd | Enable tomesd to generate images; gives really fast results. Default: yes; options: yes/no. |
| use_karras_sigmas | Use Karras sigmas to generate images; gives nice results. Default: yes; options: yes/no. |
| vae | Use a custom VAE when generating images. Default: null. |
| lora_strength | Use different LoRA strengths. Default: null. |
| lora_model | Multiple LoRAs are supported; pass comma-separated values. Example: contrast-fix,yae-miko-genshin |
| num_inference_steps | Number of denoising steps (minimum: 1; maximum: 50). |
| safety_checker | A checker for NSFW images. If such an image is detected, it is replaced by a blank image. |
| embeddings_model | Use it to pass an embeddings model. |
| enhance_prompt | Enhance prompts for better results. Default: yes; options: yes/no. |
| multi_lingual | Use a language other than English. Default: yes; options: yes/no. |
| guidance_scale | Scale for classifier-free guidance (minimum: 1; maximum: 20). |
| controlnet_conditioning_scale | Scale for ControlNet guidance (minimum: 1; maximum: 20). |
| strength | Prompt strength when using init_image. 1.0 corresponds to full destruction of the information in the init image. |
| seed | Used to reproduce results; the same seed returns the same image again. Pass null for a random number. |
| webhook | Set a URL to receive a POST API call once image generation is complete. |
| track_id | This ID is returned in the response to the webhook API call and is used to identify the webhook request. |
| upscale | Set this parameter to "yes" to upscale the given image resolution two times (2x). If the requested resolution is 512 x 512 px, the generated image will be 1024 x 1024 px. |
| clip_skip | Clip Skip (minimum: 1; maximum: 8). |
| base64 | Get the response as a base64 string; pass init_image, mask_image and control_image as base64 strings to get a base64 response. Default: "no"; options: yes/no. |
| temp | Create a temporary image link, valid for 24 hours. temp: yes; options: yes/no. |

Example request (hosted endpoint; a local WebUI listens on localhost:7860):

--request POST 'https://stablediffusionapi.com/api/v5/controlnet' \

{
  "key": "",
  "controlnet_model": "canny",
  "controlnet_type": "canny",
  "model_id": "midjourney",
  "auto_hint": "yes",
  "guess_mode": "no",
  "prompt": "a model doing photoshoot, ultra high resolution, 4K image",
  "negative_prompt": null,
  "init_image": "https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_openpose.png",
  "mask_image": null,
  "width": "512",
  "height": "512",
  "samples": "1",
  "scheduler": "UniPCMultistepScheduler",
  "num_inference_steps": "30",
  "safety_checker": "no",
  "enhance_prompt": "yes",
  "guidance_scale": 7.5,
  "strength": 0.55,
  "lora_model": "more_details",
  "clip_skip": "2",
  "tomesd": "yes",
  "use_karras_sigmas": "yes",
  "vae": null,
  "lora_strength": null,
  "embeddings_model": null,
  "seed": null,
  "webhook": null,
  "track_id": null
}

Enabling the ControlNet parameter settings

        self.setup_controlnet_params(control_enabled, control_module, control_model, control_weight,
                                     control_image, control_mask, control_invert_image, control_resize_mode,
                                     control_rgbbgr_mode, control_lowvram, control_processor_res,
                                     control_threshold_a, control_threshold_b, control_guidance_start,
                                     control_guidance_end, control_guessmode)

    def get_checkpoint_model(self):
        return self.override_settings['sd_model_checkpoint'] if 'sd_model_checkpoint' in self.override_settings else None

    def setup_controlnet_params(self, enabled, module, model, weight, image, mask, invert_image,
                                resize_mode, rgbbgr_mode, lowvram, processor_res, threshold_a,
                                threshold_b, guidance_start, guidance_end, guessmode):
        controlnet_args = {
            "enabled": enabled,  # whether ControlNet is enabled
            "module": module,  # preprocessor module
            "model": model,  # model
            "weight": weight,  # weight
            "image": image,  # uploaded image
            "mask": mask,  # mask
            "invert_image": invert_image,
            "resize_mode": resize_mode,
            "rgbbgr_mode": rgbbgr_mode,
            "lowvram": lowvram,
            "processor_res": processor_res,
            "threshold_a": threshold_a,
            "threshold_b": threshold_b,
            "guidance_start": guidance_start,
            "guidance_end": guidance_end,
            "guessmode": guessmode
        }
        self.alwayson_scripts["ControlNet"] = {
            "args": controlnet_args
        }

    def get_controlnet_params(self):
        return self.alwayson_scripts["ControlNet"]["args"] if "ControlNet" in self.alwayson_scripts else ControlNet_Model()

    def custom_to_dict(self):
        res_dict = self.dict()
        init_image = utils.image_path_to_base64(self.init_images[0])
        res_dict["init_images"][0] = init_image
        res_dict["mask"] = utils.image_path_to_base64(self.mask)
        controlnet_args: ControlNet_Model = self.get_controlnet_params()
        if controlnet_args:
            res_dict["alwayson_scripts"]["ControlNet"]["args"]["image"] = utils.image_path_to_base64(controlnet_args["image"])
            res_dict["alwayson_scripts"]["ControlNet"]["args"]["mask"] = utils.image_path_to_base64(controlnet_args["mask"])
        return res_dict

ControlNet model class

class ControlNet_Model(BaseModel):
    enabled: bool = True  # enabled
    module: str = controlnet_modules.openpose.value  # preprocessor: openpose, canny, etc.
    model: str = "control_openpose-fp16 [9ca67cc5]"  # model
    weight: float = 1.0  # weight
    image: str = None  # image
    mask: str = None  # image mask, usually not needed
    invert_image: bool = False  # invert the image
    resize_mode: int = 1  # 0: Just Resize, 1: Inner Fit, 2: Outer Fit
    rgbbgr_mode: bool = False
    lowvram: bool = False  # enable on low-VRAM GPUs
    processor_res: int = 512
    threshold_a: int = 64  # threshold
    threshold_b: int = 64  # threshold
    guidance_start: float = 0.0
    guidance_end: float = 1.0
    guessmode: bool = False

Basic parameter settings (args)

self.alwayson_scripts["ControlNet"] = {
    "args": controlnet_args
}
# image_cfg_scale: float = 0.72
# init_latent = None
# image_mask = ""
# latent_mask = None
# mask_for_overlay = None
# nmask = None
# image_conditioning = None

Request:

import requests
import json

url = "https://stablediffusionapi.com/api/v5/controlnet"

payload = json.dumps({
    "key": "",
    "controlnet_model": "controlnet_model_id",
    "controlnet_type": "canny",
    "model_id": "midjourney",
    "auto_hint": "yes",
    "guess_mode": "no",
    "prompt": "a model doing photoshoot, ultra high resolution, 4K image",
    "negative_prompt": None,
    "init_image": "https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_openpose.png",
    "mask_image": None,
    "width": "512",
    "height": "512",
    "samples": "1",
    "scheduler": "UniPCMultistepScheduler",
    "num_inference_steps": "30",
    "safety_checker": "no",
    "enhance_prompt": "yes",
    "guidance_scale": 7.5,
    "strength": 0.55,
    "lora_model": None,
    "tomesd": "yes",
    "use_karras_sigmas": "yes",
    "vae": None,
    "lora_strength": None,
    "embeddings_model": None,
    "seed": None,
    "webhook": None,
    "track_id": None
})

headers = {
    'Content-Type': 'application/json'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)

Response:

{
  "status": "processing",
  "tip": "for faster speed, keep resolution upto 512x512",
  "eta": 146.5279869184,
  "messege": "Try to fetch request after given estimated time",
  "fetch_result": "https://stablediffusionapi.com/api/v3/fetch/13902970",
  "id": 13902970,
  "output": "",
  "meta": {
    "prompt": "mdjrny-v4 style a model doing photoshoot, ultra high resolution, 4K image",
    "model_id": "midjourney",
    "controlnet_model": "canny",
    "controlnet_type": "canny",
    "negative_prompt": "",
    "scheduler": "UniPCMultistepScheduler",
    "safetychecker": "no",
    "auto_hint": "yes",
    "guess_mode": "no",
    "strength": 0.55,
    "W": 512,
    "H": 512,
    "guidance_scale": 3,
    "seed": 4016593698,
    "multi_lingual": "no",
    "init_image": "https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_openpose.png",
    "mask_image": null,
    "steps": 20,
    "full_url": "no",
    "upscale": "no",
    "n_samples": 1,
    "embeddings": null,
    "lora": null,
    "outdir": "out",
    "file_prefix": "c8bb8efe-b437-4e94-b508-a6b4705f366a"
  }
}

Multiple units

import requests
import json

url = "https://stablediffusionapi.com/api/v5/controlnet"

payload = json.dumps({
    "key": "",
    "controlnet_model": "openpose,canny,face_detector",  # enable multiple models
    "controlnet_type": "openpose",
    "model_id": "midjourney",
    "auto_hint": "yes",
    "guess_mode": "yes",
    "prompt": "human model doing photoshoot, ultra realistic face, ultra high resolution, 4K image",
    "negative_prompt": None,
    "control_image": "https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_openpose.png",
    "init_image": "https://cdn.stablediffusionapi.com/generations/0-4957a91a-a45e-459e-b4cd-b3ca4013b847.png",
    "mask_image": None,
    "width": "512",
    "height": "512",
    "samples": "1",
    "scheduler": "UniPCMultistepScheduler",
    "num_inference_steps": "30",
    "safety_checker": "no",
    "enhance_prompt": "yes",
    "guidance_scale": 7.5,
    "strength": 0.55,
    "lora_model": "yae-miko-genshin,more_details",
    "clip_skip": "2",
    "tomesd": "yes",
    "use_karras_sigmas": "yes",
    "vae": None,
    "lora_strength": None,
    "embeddings_model": None,
    "seed": None,
    "webhook": None,
    "track_id": None
})

headers = {
    'Content-Type': 'application/json'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)

{
  "status": "success",
  "generationTime": 5.408175945281982,
  "id": 29749517,
  "output": [
    "https://cdn.stablediffusionapi.com/generations/0-a14940d4-9eb0-4011-8bd7-7402d3bb2629.png"
  ],
  "meta": {
    "prompt": "mdjrny-v4 style human model doing photoshoot, ultra realistic face, ultra high resolution, 4K image",
    "model_id": "midjourney",
    "controlnet_model": "openpose,canny,face_detector",
    "controlnet_type": "openpose",
    "negative_prompt": "",
    "scheduler": "UniPCMultistepScheduler",
    "safety_checker": "no",
    "auto_hint": "yes",
    "guess_mode": "yes",
    "strength": "1",
    "W": 512,
    "H": 512,
    "guidance_scale": 7.5,
    "controlnet_conditioning_scale": 1,
    "seed": 557761096,
    "multi_lingual": "no",
    "use_karras_sigmas": "yes",
    "tomesd": "yes",
    "init_image": "https://cdn.stablediffusionapi.com/generations/0-4957a91a-a45e-459e-b4cd-b3ca4013b847.png",
    "mask_image": null,
    "control_image": "https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_openpose.png",
    "vae": null,
    "steps": 20,
    "full_url": "no",
    "upscale": "no",
    "n_samples": 1,
    "embeddings": null,
    "lora": "yae-miko-genshin,more_details",
    "lora_strength": 1,
    "temp": "no",
    "base64": "no",
    "clip_skip": 2,
    "file_prefix": "a14940d4-9eb0-4011-8bd7-7402d3bb2629.png"
  }
}

V. New features in ControlNet 1.1

Perfect support for all ControlNet 1.0/1.1 and T2I Adapter models
All available models and preprocessors are now supported, including the T2I style adapter and ControlNet 1.1 Shuffle. (Make sure your YAML file names and model file names are the same; see also the YAML files in "stable-diffusion-webui\extensions\sd-webui-controlnet\models".)

Perfect support for A1111 High-Res. Fix
If you turn on High-Res. Fix in A1111, each ControlNet now outputs two different control images: a small one and a large one. The small one is used for the basic generation and the large one for the High-Res. Fix generation. The two control images are computed by a smart algorithm called "super high-quality control image resampling". This is on by default and you do not need to change any setting.

Perfect support for all A1111 Img2Img or Inpaint settings and all mask types
ControlNet has now been extensively tested with A1111's different mask types, including "Inpaint masked"/"Inpaint not masked", "Whole picture"/"Only masked", and "Only masked padding" and "Mask blur". Resizing perfectly matches A1111's "Just resize"/"Crop and resize"/"Resize and fill". This means you can use ControlNet almost anywhere in the A1111 UI without difficulty.

The new "Pixel Perfect" mode
If you turn on Pixel Perfect mode, you no longer need to set the preprocessor (annotator) resolution manually. ControlNet automatically computes the best annotator resolution so that each pixel perfectly matches Stable Diffusion.

User-friendly GUI and preprocessor preview
Some previously confusing UI has been reorganized, for example "canvas width/height for new canvas", which is now behind the 📝 button. The preview GUI is now controlled by the "Allow Preview" option and the trigger button 💥. The preview image size is better than before and you do not need to scroll up and down; your A1111 GUI will not be messed up anymore.

Support for almost all upscaling scripts
ControlNet 1.1 now supports almost all upscaling/tile methods. It supports the script "Ultimate SD upscale" and almost all other tile-based extensions. Do not confuse "Ultimate SD upscale" with "SD upscale"; they are different scripts. The most recommended upscaling method is "Tiled VAE/Diffusion", but as many methods/extensions as possible were tested. "SD upscale" is supported from 1.1.117 onward; if you use it, you need to leave all ControlNet images blank ("SD upscale" is not recommended because it is somewhat buggy and cannot be maintained; use "Ultimate SD upscale" instead).

More control modes (previously called Guess Mode)
Many bugs in the Guess Mode of the previous 1.0 release have been fixed, and it is now called Control Mode.

You can now control which aspect is more important (your prompt or ControlNet):

"Balanced": ControlNet on both sides of the CFG scale, the same as turning off "Guess Mode" in ControlNet 1.0.
"My prompt is more important": ControlNet on both sides of the CFG scale, with progressively reduced SD U-Net injections (layer_weight*=0.825**I, where 0

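The decay rule quoted for "My prompt is more important", layer_weight *= 0.825**I, can be made concrete with a few lines of arithmetic. This is only an illustrative sketch: the function name is hypothetical, the number of injection points (13) is an assumption that the text above does not state, and the article does not say which end of the U-Net receives the smallest weight.

```python
def decayed_layer_weights(base_weight: float = 1.0,
                          num_layers: int = 13,  # assumed number of injection points
                          decay: float = 0.825) -> list[float]:
    """Return base_weight * decay**I for I = 0 .. num_layers - 1."""
    return [base_weight * decay ** index for index in range(num_layers)]


weights = decayed_layer_weights()
for index, weight in enumerate(weights):
    # Each step multiplies the previous weight by 0.825, so the influence shrinks geometrically.
    print(f"I={index:2d}  weight={weight:.4f}")
```

With these assumed values the last weight is roughly a tenth of the first, which is why the prompt dominates in this mode.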