
Scraping image (binary) data with Scrapy

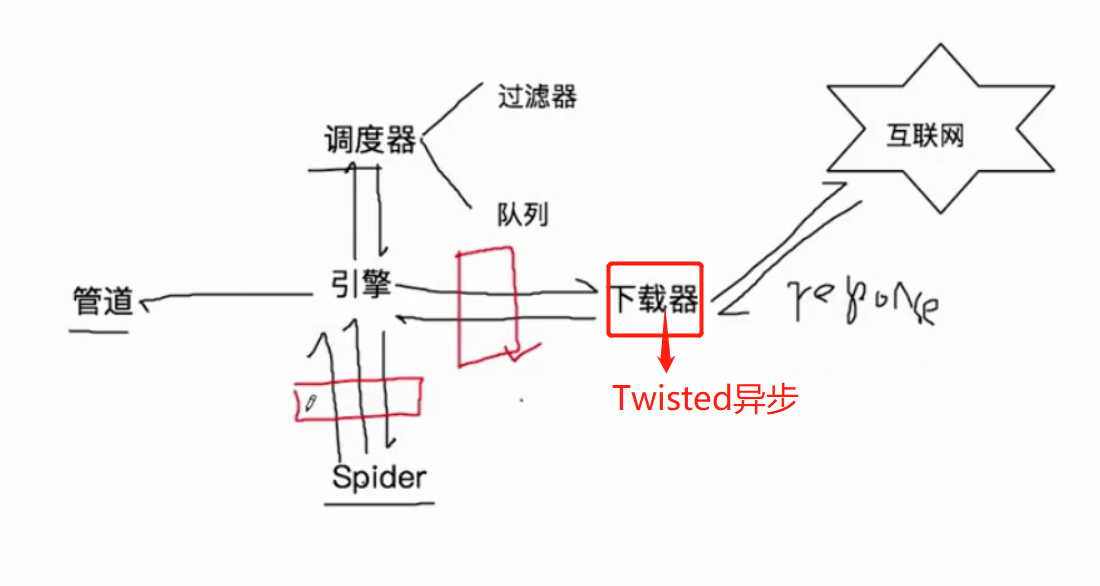

1. In the spider file, parse out each image URL and image name, wrap them in an item object, and submit the item to the pipeline.
2. In the pipeline file:
   - from scrapy.pipelines.images import ImagesPipeline
   - Define a pipeline class that inherits from ImagesPipeline
   - Override three methods of the parent class:
     - get_media_requests
     - file_path: only needs to return the image name
     - item_completed
3. Add the following setting to the settings file:
   - IMAGES_STORE = 'folder path'

xiaohua.py

    # -*- coding: utf-8 -*-
    import scrapy
    from xiaohuaPro.items import XiaohuaproItem


    class XiaohuaSpider(scrapy.Spider):
        name = 'xiaohua'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://www.521609.com/daxuemeinv/']

        def parse(self, response):
            # Extract the image URL and image name from each list entry
            li_list = response.xpath('//*[@id="content"]/div[2]/div[2]/ul/li')
            for li in li_list:
                img_src = 'http://www.521609.com' + li.xpath('./a[1]/img/@src').extract_first()
                img_name = li.xpath('./a[1]/img/@alt').extract_first() + '.jpg'
                item = XiaohuaproItem()
                item['img_name'] = img_name
                item['img_src'] = img_src
                yield item

pipelines.py

    import scrapy
    from scrapy.pipelines.images import ImagesPipeline


    class XiaohuaproPipeline(ImagesPipeline):
        # Send the request for the image data.
        # The item argument is the item submitted by the spider file.
        def get_media_requests(self, item, info):
            # meta carries the dict through to the file_path method
            yield scrapy.Request(item['img_src'], meta={'item': item})

        # Specify where the image is stored (relative to IMAGES_STORE)
        def file_path(self, request, response=None, info=None):
            # Recover the image name from the item passed in via meta
            item = request.meta['item']
            img_name = item['img_name']
            return img_name

        # Pass the item on to the next pipeline class to be executed
        def item_completed(self, results, item, info):
            return item

Scrapy deep crawling: passing data between requests

What deep crawling means:
- The data to scrape does not all live on the same page.

Passing data between requests:
- When you send a request manually, the meta parameter passes its dict to the callback.
- In the callback, read the dict back through response.meta.
- This lets different parse methods share the same item object.

Spider file:

    # -*- coding: utf-8 -*-
    import scrapy
    from moviePro.items import MovieproItem


    class MovieSpider(scrapy.Spider):
        name = 'movie'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://www.4567kan.com/index.php/vod/show/id/1.html']
        url_model = 'https://www.4567kan.com/index.php/vod/show/id/%d.html'
        page = 2

        # Parse the listing page
        def parse(self, response):
            li_list = response.xpath('/html/body/div[1]/div/div/div/div[2]/ul/li')
            for li in li_list:
                title = li.xpath('./div/a/@title').extract_first()
                item = MovieproItem()
                item['title'] = title
                detail_url = 'https://www.4567kan.com' + li.xpath('./div/a/@href').extract_first()
                # Request the detail page.
                # The meta dict is handed to the callback.
                yield scrapy.Request(url=detail_url, callback=self.parse_detail, meta={'item': item})

            # Follow listing pages 2 through 5
            if self.page < 6:
                new_url = self.url_model % self.page
                self.page += 1
                yield scrapy.Request(url=new_url, callback=self.parse)

        # Parse the detail page
        def parse_detail(self, response):
            item = response.meta['item']
            desc = response.xpath('/html/body/div[1]/div/div/div/div[2]/p[5]/span[2]/text()').extract_first()
            item['desc'] = desc
            yield item

The five core components of Scrapy

Engine (Scrapy Engine): handles the data flow across the whole system and triggers events. It is the core of the framework.

Scheduler: accepts requests sent by the engine, pushes them onto a queue, and returns them when the engine asks again. Think of it as a priority queue of URLs (the addresses of the pages to crawl): it decides which URL is crawled next and removes duplicate URLs.

Downloader: downloads page content and returns it to the spider. The Scrapy downloader is built on Twisted, an efficient asynchronous model.

Spiders: the spiders do the main work. They extract the information you need, the so-called items, from specific pages. You can also extract links from a page so that Scrapy goes on to crawl the next page.

Item Pipeline: processes the items that the spiders extract from pages. Its main jobs are persisting items, validating them, and removing unwanted information. After a page is parsed by a spider, the items are sent to the item pipeline and processed through several stages in a specific order.

Middleware

- Spider middleware
- Downloader middleware (*)
  - Purpose: intercept, in bulk, every request and response issued in the framework
  - Why intercept requests
    - To modify request headers
      - UA (User-Agent)
    - To set a proxy for the request
      - Written in process_exception:
      - request.meta['proxy'] = 'http://ip:port'
  - Why intercept responses
    - To modify the response data
      - When a group of responses does not contain the data we need, we intercept them and replace the unsuitable response data with data that meets our needs.

In the settings file, uncomment the corresponding middleware entry:

    DOWNLOADER_MIDDLEWARES = {
        'middlePro.middlewares.MiddleproDownloaderMiddleware': 543,
    }

middlewares.py

    from scrapy import signals
    import random

    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
        "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
    ]

    # Placeholder proxy addresses in ip:port form
    https_proxy = [
        '1.1.1.1:8888',
        '2.2.2.2:8880',
    ]
    http_proxy = [
        '11.11.11.11:8888',
        '21.21.21.21:8880',
    ]


    class MiddleproDownloaderMiddleware:
        # Intercepts every request, both normal and failing ones.
        # request is the intercepted request object.
        def process_request(self, request, spider):
            # Give each request a different User-Agent where possible
            request.headers['User-Agent'] = random.choice(user_agent_list)
            print('i am process_request:' + request.url)
            return None

        # Intercepts every response.
        # response: the intercepted response
        # request: the request that produced this response
        def process_response(self, request, response, spider):
            print('i am process_response:', response)
            return response

        # Intercepts requests that raised an exception.
        # Why intercept them? So the failing request can be corrected
        # and then sent again.
        def process_exception(self, request, exception, spider):
            print('i am process_exception:', request.url)
            # print('exception details:', exception)
            # Set a proxy; the proxy value needs the scheme, e.g. 'http://ip:port'
            if request.url.split(':')[0] == 'https':
                request.meta['proxy'] = 'https://' + random.choice(https_proxy)
            else:
                request.meta['proxy'] = 'http://' + random.choice(http_proxy)
            # Returning the request is enough to have it sent again
            return request

NetEase News spider (a middleware use case)

wangyi.py

    # -*- coding: utf-8 -*-
    import scrapy
    from selenium import webdriver
    from wangyiPro.items import WangyiproItem


    class WangyiSpider(scrapy.Spider):
        name = 'wangyi'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://news.163.com/']
        model_urls = []
        # Instantiate the browser object (Selenium 3 style driver path;
        # Selenium 4 expects a Service object instead)
        bro = webdriver.Chrome('/Users/bobo/Desktop/29期授课相关/day06/chromedriver')

        # Parse the URLs of the five news sections
        def parse(self, response):
            li_list = response.xpath('//*[@id="index2016_wrap"]/div[1]/div[2]/div[2]/div[2]/div[2]/div/ul/li')
            model_index = [3, 4, 6, 7, 8]
            for index in model_index:
                li = li_list[index]
                model_url = li.xpath('./a/@href').extract_first()
                self.model_urls.append(model_url)
            # Send a request for each section URL
            for url in self.model_urls:
                yield scrapy.Request(url=url, callback=self.parse_model)

        # Parse the data on each section page.
        # The news data on each section page is loaded dynamically.
        def parse_model(self, response):
            # Parse the news title and the detail page URL.
            # The raw response does not contain this data; the downloader
            # middleware replaces it with the rendered page.
            div_list = response.xpath('/html/body/div/div[3]/div[4]/div[1]/div/div/ul/li/div/div')
            for div in div_list:
                title = div.xpath('./div/div[1]/h3/a/text()').extract_first()
                new_detail_url = div.xpath('./div/div[1]/h3/a/@href').extract_first()
                if new_detail_url:
                    item = WangyiproItem()
                    item['title'] = title
                    yield scrapy.Request(url=new_detail_url, callback=self.parse_new, meta={'item': item})

        # Parse the article text on each news detail page
        def parse_new(self, response):
            item = response.meta['item']
            content = response.xpath('//*[@id="endText"]//text()').extract()
            content = ''.join(content)
            item['content'] = content
            yield item

        # Override the parent class method; called when the spider closes
        def closed(self, reason):
            print('All done!')
            self.bro.quit()

middlewares.py

    from time import sleep
    from scrapy.http import HtmlResponse


    class WangyiproDownloaderMiddleware:
        def process_request(self, request, spider):
            return None

        def process_response(self, request, response, spider):
            # Intercept the specified responses and replace them
            bro = spider.bro
            if request.url in spider.model_urls:
                # response is the response of one of the five sections.
                # Fetch response data that meets our needs with selenium.
                bro.get(request.url)
                sleep(2)
                bro.execute_script('window.scrollTo(0,document.body.scrollHeight)')
                sleep(1)
                # Page source after the dynamic content has loaded
                page_text = bro.page_source
                # Build a new response object with page_text as its body
                new_response = HtmlResponse(url=request.url, body=page_text, request=request, encoding='utf-8')
                return new_response
            else:
                return response

        def process_exception(self, request, exception, spider):
            pass
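To make the three downloader middleware hooks easier to picture, here is a minimal standard-library sketch of the flow they take part in: modify the request, attempt the download, repair a failed request, and modify the response. It does not use Scrapy. `Request`, `ToyMiddleware`, `fake_download`, `fetch`, and the placeholder user-agent and proxy values are all hypothetical names made up for this illustration, and the retry loop only approximates how Scrapy reschedules a request returned from process_exception.

```python
import random

# Placeholder values for illustration only
USER_AGENTS = ["ua-one", "ua-two"]
PROXIES = ["http://10.0.0.1:8888"]


class Request:
    """Tiny stand-in for a request: a URL plus headers and meta dicts."""

    def __init__(self, url):
        self.url = url
        self.headers = {}
        self.meta = {}


class ToyMiddleware:
    def process_request(self, request):
        # Modify the request before it is downloaded
        request.headers["User-Agent"] = random.choice(USER_AGENTS)
        return None

    def process_response(self, request, response):
        # Modify the response before the spider sees it
        return response.upper()

    def process_exception(self, request, exception):
        # Repair the failed request and hand it back for another attempt
        request.meta["proxy"] = random.choice(PROXIES)
        return request


def fake_download(request):
    # Pretend the site refuses any request that has no proxy set
    if "proxy" not in request.meta:
        raise ConnectionError("blocked without a proxy")
    return f"body of {request.url}"


def fetch(request, middleware, max_retries=2):
    # Run the request through the hooks, retrying after an exception
    for _ in range(max_retries + 1):
        middleware.process_request(request)
        try:
            response = fake_download(request)
        except ConnectionError as exc:
            request = middleware.process_exception(request, exc)
            continue
        return middleware.process_response(request, response)
    raise RuntimeError("gave up after retries")
```

Calling `fetch(Request("http://example.com/"), ToyMiddleware())` fails on the first attempt, gets a proxy in process_exception, and succeeds on the second attempt, which mirrors why the proxy is set in process_exception in the middleware above.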

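The scheduler among the five core components is described as a priority queue of URLs that also removes duplicates. A minimal standard-library sketch of that idea follows. `ToyScheduler` and its method names are hypothetical and are not Scrapy's API; Scrapy's own scheduler deduplicates by request fingerprint rather than by raw URL string.

```python
import heapq
import itertools


class ToyScheduler:
    """Priority queue of URLs that silently drops duplicates."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        # Tie-breaker so equal priorities come out in insertion order
        self._counter = itertools.count()

    def enqueue(self, url, priority=0):
        # Return False when the URL was already scheduled once
        if url in self._seen:
            return False
        self._seen.add(url)
        # heapq is a min-heap, so negate: higher priority pops first
        heapq.heappush(self._heap, (-priority, next(self._counter), url))
        return True

    def next_url(self):
        # Return the next URL to crawl, or None when the queue is empty
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```

Keeping the seen set separate from the heap means a URL stays deduplicated even after it has been popped and crawled.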