
Recognizing CIFAR10 with PyTorch: Training ResNet


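Copyright notice: this is an original article by the blogger. Reposting is welcome; please credit the source. Contact: [email protected]

The more layers a CNN has, the richer the features it can extract. Simply stacking more convolutional layers, however, leads to vanishing or exploding gradients during training.

In 2015 Kaiming He proposed the residual neural network, ResNet, which won the classification task of ILSVRC-2015.

ResNet effectively mitigates the vanishing and exploding gradient problems caused by increasing the number of convolutional layers.

The core idea of ResNet is to split the output of a block into two parts, an identity mapping and a residual mapping, i.e. y = x + F(x).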
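A short derivation may help show why the identity path eases gradient flow. This is only a sketch for the plain form y = x + F(x) stated above; the loss symbol $L$ is an assumption introduced here, gradients are written as row vectors, and the ReLU that the implementation applies after the addition is ignored.

$$
y = x + F(x)
\quad\Longrightarrow\quad
\frac{\partial L}{\partial x}
= \frac{\partial L}{\partial y}\left(I + \frac{\partial F}{\partial x}\right)
= \frac{\partial L}{\partial y} + \frac{\partial L}{\partial y}\,\frac{\partial F}{\partial x}
$$

The first term reaches $x$ unchanged through the shortcut, so even when $\partial F / \partial x$ is very small the gradient arriving at earlier layers does not shrink to zero on that account alone.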

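By changing the learning target from the complete output to the residual, ResNet addresses the information loss and degradation that conventional convolutional layers suffer as information propagates. Routing the input directly to the output through a shortcut preserves the integrity of the information. The simpler learning target also makes training easier.

Common ResNet architectures are: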

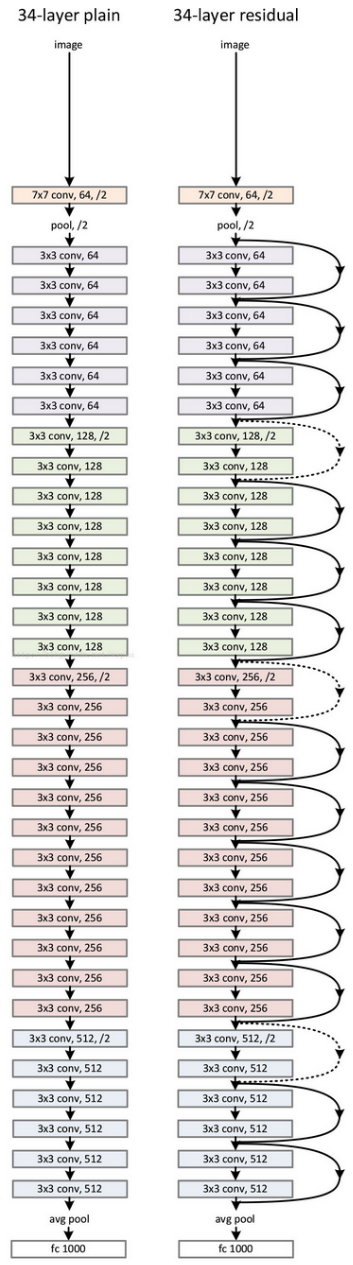
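As a rough cross-check of the architecture table, the sketch below derives each variant's nominal depth from its number of residual blocks per stage. The block counts are those of the standard ImageNet ResNet variants as commonly published, not values read from the figure; `RESNET_CONFIGS` and `count_weighted_layers` are hypothetical names used only for this illustration.

```python
# Residual blocks per stage for the standard ResNet variants (assumed from
# the commonly published configurations, not read from the figure above).
RESNET_CONFIGS = {
    "ResNet-18": ("basic", [2, 2, 2, 2]),
    "ResNet-34": ("basic", [3, 4, 6, 3]),
    "ResNet-50": ("bottleneck", [3, 4, 6, 3]),
    "ResNet-101": ("bottleneck", [3, 4, 23, 3]),
    "ResNet-152": ("bottleneck", [3, 8, 36, 3]),
}

# A basic block holds 2 convolutions, a bottleneck block holds 3.
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def count_weighted_layers(block_type, blocks_per_stage):
    """Nominal depth: convs inside all blocks + first conv + final fc layer."""
    return CONVS_PER_BLOCK[block_type] * sum(blocks_per_stage) + 2


if __name__ == "__main__":
    for name, (block_type, stages) in RESNET_CONFIGS.items():
        print(name, count_weighted_layers(block_type, stages))
```

The `[3, 4, 6, 3]` entry for ResNet-34 matches the `block_num` arguments passed to `make_layer` in the implementation later in this article.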

The 34-layer ResNet is illustrated below:

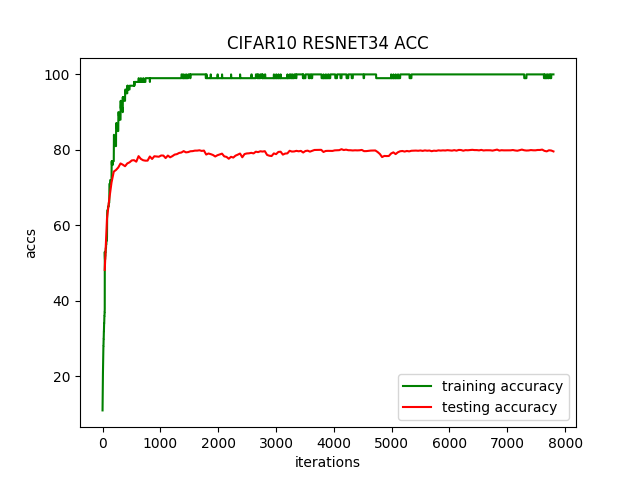

The PyTorch code that implements and trains ResNet-34 is as follows:

```python
# -*- coding:utf-8 -*-

"""Train a ResNet on CIFAR10."""

__author__ = 'zhengbiqing [email protected]'


import torch as t
import torchvision as tv
import torch.nn as nn
import torch.optim as optim
import torchvision.transforms as transforms
from torchvision.transforms import ToPILImage
import torch.backends.cudnn as cudnn

import matplotlib.pyplot as plt

import datetime
import argparse


# Number of data-loading workers
WORKERS = 4

# File name for the saved network parameters
PARAS_FN = 'cifar_resnet_params.pkl'

# Location of the CIFAR10 data
ROOT = '/home/zbq/PycharmProjects/cifar'

# Objective function
loss_func = nn.CrossEntropyLoss()

# Best result so far
best_acc = 0

# Recorded accuracies, used to plot the curves
global_train_acc = []
global_test_acc = []


class ResBlock(nn.Module):
    """Residual block.

    in_channels, out_channels: input and output channel counts of the block.
    In the first layer both are 64; in the other layers the first block
    changes the channel count.
    stride is 1 when the input and output channel counts are equal, else 2.
    """

    def __init__(self, in_channels, out_channels, stride=1):
        super(ResBlock, self).__init__()

        # First convolution of the residual block.
        # Changes the channel count in->out for the first block of every
        # layer except the first layer.
        # Image size: with stride=2, (w - 3 + 2) // 2 + 1 = w / 2, giving w/2 * w/2;
        # with stride=1 the size is unchanged, (w - 3 + 2) // 1 + 1 = w.
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

        # Second convolution of the residual block.
        # Channel count and image size are both unchanged.
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channels)

        # Shortcut of the residual block.
        # If the main path changes the channel count or the image size, the
        # shortcut must apply the same change so the two can be added.
        # In this network that means: channels * 2, image size / 2.
        if stride != 1 or in_channels != out_channels:
            self.downsample = nn.Sequential(
                # Use the block's stride so the shortcut always matches conv1
                # (the original hard-coded stride=2, which is the same here).
                nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride),
                nn.BatchNorm2d(out_channels)
            )
        else:
            # Same shape, no transform needed: identity = x in forward
            self.downsample = None

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out


class ResNet34(nn.Module):
    """Network definition."""

    def __init__(self, block):
        super(ResNet34, self).__init__()

        # Initial convolution and pooling layers
        self.first = nn.Sequential(
            # Conv layer 1: 7*7 kernel, stride 2, padding 3,
            # output map: (32 - 7 + 2*3) // 2 + 1 = 16, i.e. 16*16
            nn.Conv2d(3, 64, 7, 2, 3),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),

            # Max pooling: 3*3 kernel, stride 1 (the 32*32 input is already
            # small, so the size is not reduced further), padding 1,
            # output map: (16 - 3 + 2*1) // 1 + 1 = 16, i.e. 16*16
            nn.MaxPool2d(3, 1, 1)
        )

        # Layer 1: channel count unchanged
        self.layer1 = self.make_layer(block, 64, 64, 3, 1)

        # Layers 2, 3, 4: channels * 2, image size / 2
        self.layer2 = self.make_layer(block, 64, 128, 4, 2)   # output 8*8
        self.layer3 = self.make_layer(block, 128, 256, 6, 2)  # output 4*4
        self.layer4 = self.make_layer(block, 256, 512, 3, 2)  # output 2*2

        self.avg_pool = nn.AvgPool2d(2)                       # output 512*1
        self.fc = nn.Linear(512, 10)

    def make_layer(self, block, in_channels, out_channels, block_num, stride):
        layers = []

        # First block of the layer: the channel count may change
        layers.append(block(in_channels, out_channels, stride))

        # Remaining blocks: channel count and image size unchanged
        for i in range(block_num - 1):
            layers.append(block(out_channels, out_channels, 1))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.first(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avg_pool(x)

        # x.size()[0]: batch size
        x = x.view(x.size()[0], -1)
        x = self.fc(x)

        return x


def net_train(net, train_data_load, optimizer, epoch, log_interval):
    """Train the network for one epoch.

    net: the network model
    train_data_load: loader for the training set
    optimizer: the optimizer
    epoch: index of the current training epoch
    log_interval: how often (in batches) the training accuracy is recorded
    """
    net.train()

    begin = datetime.datetime.now()

    # Total number of samples
    total = len(train_data_load.dataset)

    # Sum of the per-batch loss values
    train_loss = 0

    # Number of correctly classified samples
    ok = 0

    for i, data in enumerate(train_data_load, 0):
        img, label = data
        img, label = img.cuda(), label.cuda()

        optimizer.zero_grad()

        outs = net(img)
        loss = loss_func(outs, label)
        loss.backward()
        optimizer.step()

        # Accumulate the loss value
        train_loss += loss.item()

        _, predicted = t.max(outs.detach(), 1)
        # Accumulate the number of correct predictions as a Python int,
        # so the recorded accuracies can be plotted directly
        ok += (predicted == label).sum().item()

        if (i + 1) % log_interval == 0:
            # Number of samples trained so far
            trained_total = (i + 1) * len(label)

            # Accuracy in percent
            acc = 100. * ok / trained_total

            # Record the training accuracy for the curve
            global_train_acc.append(acc)

    end = datetime.datetime.now()
    print('one epoch spend: ', end - begin)


def net_test(net, test_data_load, epoch):
    """Check the accuracy on the test set."""
    global best_acc

    net.eval()

    ok = 0

    # No gradients are needed for evaluation
    with t.no_grad():
        for i, data in enumerate(test_data_load):
            img, label = data
            img, label = img.cuda(), label.cuda()

            outs = net(img)
            _, pre = t.max(outs, 1)
            ok += (pre == label).sum().item()

    acc = ok * 100. / (len(test_data_load.dataset))
    print('EPOCH:{}, ACC:{}\n'.format(epoch, acc))

    # Record the test accuracy for the curve
    global_test_acc.append(acc)

    # Keep track of the best accuracy
    if acc > best_acc:
        best_acc = acc


def img_show(dataset, index):
    """Show one image from the dataset."""
    classes = ('plane', 'car', 'bird', 'cat',
               'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

    show = ToPILImage()

    data, label = dataset[index]
    print('img is a ', classes[label])
    show((data + 1) / 2).resize((100, 100)).show()


def show_acc_curv(ratio):
    """Plot the training and test accuracy curves."""
    # x and y of the training accuracy curve
    train_x = list(range(len(global_train_acc)))
    train_y = global_train_acc

    # x and y of the test accuracy curve:
    # one test accuracy for every `ratio` training accuracies
    test_x = train_x[ratio-1::ratio]
    test_y = global_test_acc

    plt.title('CIFAR10 RESNET34 ACC')

    plt.plot(train_x, train_y, color='green', label='training accuracy')
    plt.plot(test_x, test_y, color='red', label='testing accuracy')

    # Show the legend
    plt.legend()
    plt.xlabel('iterations')
    plt.ylabel('accs')

    plt.show()


def main():
    # Training hyperparameters, settable from the command line
    parser = argparse.ArgumentParser(description='PyTorch CIFAR10 ResNet34 Example')
    parser.add_argument('--batch-size', type=int, default=128, metavar='N',
                        help='input batch size for training (default: 128)')
    parser.add_argument('--test-batch-size', type=int, default=100, metavar='N',
                        help='input batch size for testing (default: 100)')
    parser.add_argument('--epochs', type=int, default=200, metavar='N',
                        help='number of epochs to train (default: 200)')
    parser.add_argument('--lr', type=float, default=0.1, metavar='LR',
                        help='learning rate (default: 0.1)')
    parser.add_argument('--momentum', type=float, default=0.9, metavar='M',
                        help='SGD momentum (default: 0.9)')
    parser.add_argument('--log-interval', type=int, default=10, metavar='N',
                        help='how many batches to wait before logging training status (default: 10)')
    parser.add_argument('--no-train', action='store_true', default=False,
                        help='Skip training and only test with the saved parameters')
    parser.add_argument('--save-model', action='store_true', default=False,
                        help='For Saving the current Model')
    args = parser.parse_args()

    # Image value conversion. From the ToTensor source comments:
    #   Convert a ``PIL Image`` or ``numpy.ndarray`` to tensor.
    #   Converts a PIL Image or numpy.ndarray (H x W x C) in the range
    #   [0, 255] to a torch.FloatTensor of shape (C x H x W) in the range [0.0, 1.0].
    # Normalize maps [0.0, 1.0] to [-1, 1]: ([0, 1] - 0.5) / 0.5 = [-1, 1]
    transform = tv.transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])])

    # Define the datasets
    train_data = tv.datasets.CIFAR10(root=ROOT, train=True, download=True, transform=transform)
    test_data = tv.datasets.CIFAR10(root=ROOT, train=False, download=False, transform=transform)

    train_load = t.utils.data.DataLoader(train_data, batch_size=args.batch_size, shuffle=True, num_workers=WORKERS)
    test_load = t.utils.data.DataLoader(test_data, batch_size=args.test_batch_size, shuffle=False, num_workers=WORKERS)

    net = ResNet34(ResBlock).cuda()
    print(net)

    # Data-parallel execution to speed things up
    net = nn.DataParallel(net)
    cudnn.benchmark = True

    # If not training, load the saved parameters and evaluate on the test set
    if args.no_train:
        net.load_state_dict(t.load(PARAS_FN))
        net_test(net, test_load, 0)
        return

    optimizer = optim.SGD(net.parameters(), lr=args.lr, momentum=args.momentum)

    start_time = datetime.datetime.now()

    for epoch in range(1, args.epochs + 1):
        net_train(net, train_load, optimizer, epoch, args.log_interval)

        # Check the accuracy on the test set after every epoch
        net_test(net, test_load, epoch)

    end_time = datetime.datetime.now()

    print('CIFAR10 pytorch ResNet34 Train: EPOCH:{}, BATCH_SZ:{}, LR:{}, ACC:{}'.format(args.epochs, args.batch_size, args.lr, best_acc))
    print('train spend time: ', end_time - start_time)

    # Number of training accuracies recorded per epoch
    ratio = len(train_data) / args.batch_size / args.log_interval
    ratio = int(ratio)

    # Show the curves
    show_acc_curv(ratio)

    if args.save_model:
        t.save(net.state_dict(), PARAS_FN)


if __name__ == '__main__':
    main()
```

Run output:

```
Files already downloaded and verified
ResNet34(
  (first): Sequential(
    (0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
    (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace)
    (3): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
  )
  (layer1): Sequential(
    (0): ResBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): ResBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): ResBlock(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2))
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (3): ResBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): ResBlock(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2))
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (3): ResBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (4): ResBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (5): ResBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer4): Sequential(
    (0): ResBlock(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2))
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avg_pool): AvgPool2d(kernel_size=2, stride=2, padding=0)
  (fc): Linear(in_features=512, out_features=10, bias=True)
)
one epoch spend: 0:00:23.971338
EPOCH:1, ACC:48.54

one epoch spend: 0:00:22.339190
EPOCH:2, ACC:61.06

......

one epoch spend: 0:00:22.023034
EPOCH:199, ACC:79.84

one epoch spend: 0:00:22.057692
EPOCH:200, ACC:79.6

CIFAR10 pytorch ResNet34 Train: EPOCH:200, BATCH_SZ:128, LR:0.1, ACC:80.19
train spend time: 1:18:40.948080
```

Training ran for 200 epochs at about 22 seconds per epoch. The accuracy is not high: the best test accuracy is only about 80% (80.19%). The script plots the training and test accuracy curves when training finishes.
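The feature-map sizes noted in the code comments (16*16 after the first convolution, then 8*8, 4*4 and 2*2) can be checked with plain arithmetic. The sketch below applies the usual output-size formula, floor((w - kernel + 2 * padding) / stride) + 1, to the layer settings used in this article for a 32*32 input. `conv_out_size` and `trace_sizes` are hypothetical helper names for this illustration, and only the stride-bearing first convolution of each layer is traced, since the remaining 3*3, stride-1, padding-1 convolutions leave the size unchanged.

```python
def conv_out_size(w, kernel, stride, padding):
    """Output width of a conv/pool layer for a square input of width w."""
    return (w - kernel + 2 * padding) // stride + 1


def trace_sizes(w=32):
    """Trace the spatial size through the ResNet34 defined in this article."""
    sizes = {}

    w = conv_out_size(w, kernel=7, stride=2, padding=3)   # first conv
    sizes["conv1"] = w
    w = conv_out_size(w, kernel=3, stride=1, padding=1)   # max pool, stride 1
    sizes["maxpool"] = w
    w = conv_out_size(w, kernel=3, stride=1, padding=1)   # layer1 keeps the size
    sizes["layer1"] = w

    # layer2..layer4: the first block uses stride 2 and halves the size
    for name in ("layer2", "layer3", "layer4"):
        w = conv_out_size(w, kernel=3, stride=2, padding=1)
        sizes[name] = w

    w = conv_out_size(w, kernel=2, stride=2, padding=0)   # AvgPool2d(2)
    sizes["avg_pool"] = w
    return sizes


if __name__ == "__main__":
    for layer, size in trace_sizes().items():
        print("{}: {}*{}".format(layer, size, size))
```

The same formula explains why the 1*1 shortcut convolution needs the block's stride: with kernel 1, stride 2 and no padding, a 16-wide map becomes 8 wide, matching the main path so the two tensors can be added.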
