
HBase Programming Practice (Lab 3)


Author: 一乐乐. Welcome to 一乐乐's blog on cnblogs (博客园).

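HBase programming practice
✿ Preparation: starting and stopping HBase (and the bugs you may hit along the way)
I. Using Shell commands in HBase:
① Creating a table (create: the first argument is the table name, followed by the column family names)
✿ Adding data
② put (the first argument is the table name, the second is the row key, the third is the column family with an optional column qualifier)
✿ Deleting data
③ delete:
□ The inverse of put
□ Deleting all data in a row
□ Deleting a table
✿ Viewing data:
□ get: shows the data in one row
□ scan: shows all the data in a table
✿ Querying historical data:
④ Querying the historical versions of a table takes three steps.
✿ Leaving the HBase shell:
⑤ Command: exit
II. HBase programming practice:
✿ Preparation: importing the jar packages
Example: create a table, insert data, and read the data back

III. Lab: getting familiar with common HBase operations:
(1) Implement the following functions in code, and complete the same tasks with the HBase Shell commands provided by Hadoop: list information about all HBase tables, such as table names; print all records of a specified table to the terminal; add and delete a specified column family or column in an existing table; clear all records of a specified table; count the rows of a table.
(2) Given the following tables and data from a relational database, convert them into tables suitable for HBase and insert the data: the student table (Student), the course table (Course) and the enrollment table (SC). In addition, implement the following functions in code: ...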

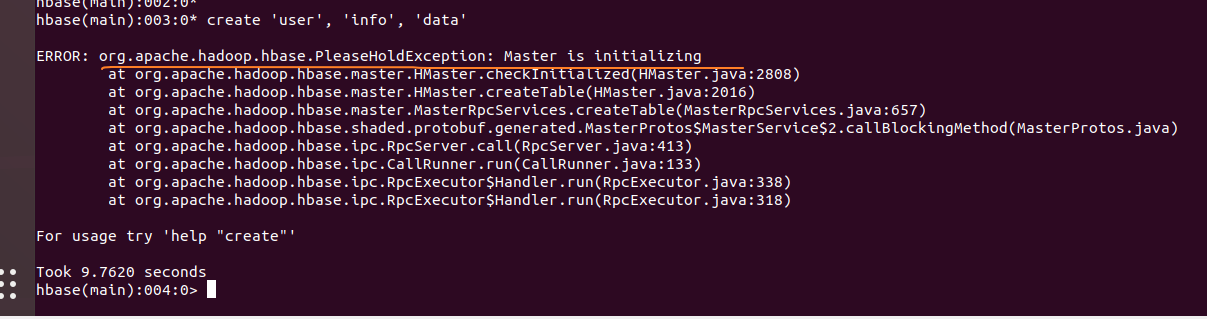

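✿ Preparation:
■ Start Hadoop first, then HBase. (To shut down, stop HBase first, then Hadoop.)
□ Start Hadoop:

    ssh localhost
    cd /usr/local/hadoop
    ./sbin/start-dfs.sh

□ Start HBase. (HBase's bin directory was added to the PATH environment variable, so its scripts can be run from any directory in the terminal. Hadoop was not added to PATH, so you have to change into its installation directory and run the scripts under sbin.)

    start-hbase.sh

□ Enter the shell:

    hbase shell

□ Stop HBase:

    stop-hbase.sh

□ Stop Hadoop:

    cd /usr/local/hadoop
    ./sbin/stop-dfs.sh

A bug you may run into: org.apache.hadoop.hbase.PleaseHoldException: Master is initializing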

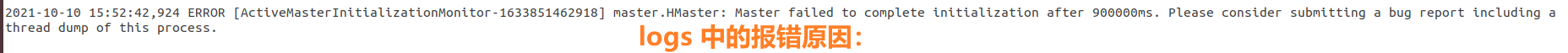

The logs report: master.HMaster: Master failed to complete initialization after 900000ms. Please consider submitting a bug report including a thread dump of this process.

master.SplitLogManager: error while splitting logs in [hdfs://localhost:9000/hbase/WALs...]

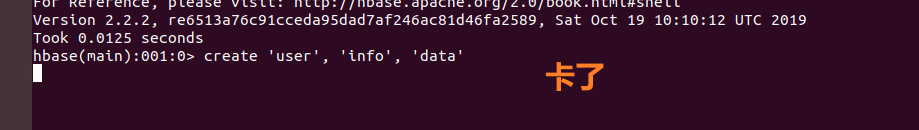

When it rains, it pours: it hung again...

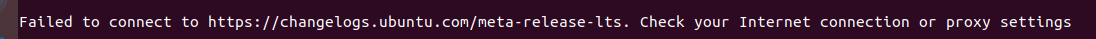

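Fix: press Ctrl+C, stop HBase, stop Hadoop, close the shell, reboot, and log in again. (Running ssh localhost then printed the message below. My hunch was that it is harmless, so I skipped it.) Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings.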

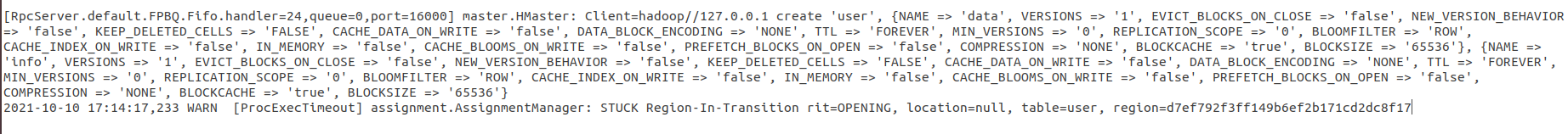

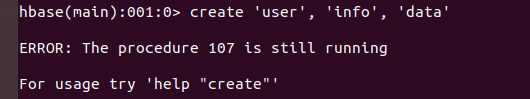

Something weird: one second a table was created successfully, the next second the shell hung.

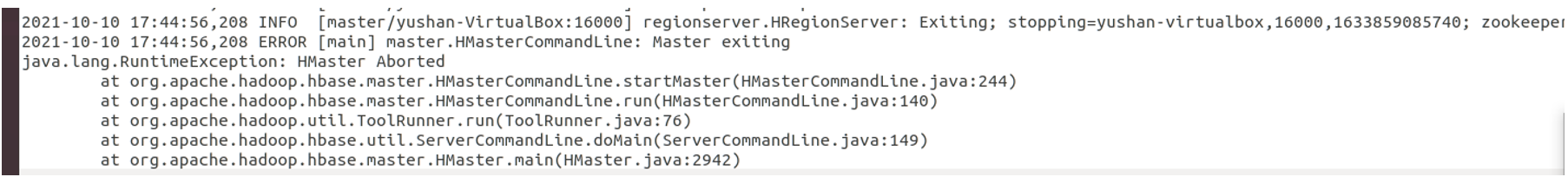

The log shows the following. [The weird part: creating a table named user hangs, while creating a table with any other name works fine.] Warning: WARN [ProcExecTimeout] assignment.AssignmentManager: STUCK Region-In-Transition rit=OPENING, location=null, table=user, region=d7e... And then: ERROR: master.HMasterCommandLine: Master exiting...

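At this point the bugs kept piling up, so I chose to reinstall. (Reinstalling is a perfectly good way to solve the problem, so why not? No fix is nobler than another; the most efficient one is the best.) The original cause may have been the order in which I entered the commands to delete the table. I first ran truncate 'user' ('user' is the table name) and then drop 'user'. A table can only be dropped after it has been disabled (although it seems the table had already been disabled before I entered drop, haha). No big deal: a reinstall takes care of it.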

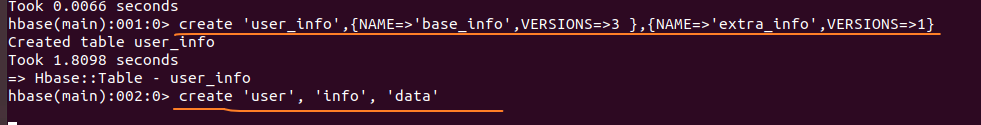

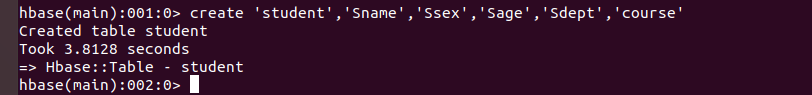

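I. Using Shell commands in HBase:
① Creating a table in HBase (create: the first argument is the table name, followed by the column family names)
Syntax: create 'table name','column family 1','column family 2','column family N'

    create 'student','Sname','Ssex','Sage','Sdept','course'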

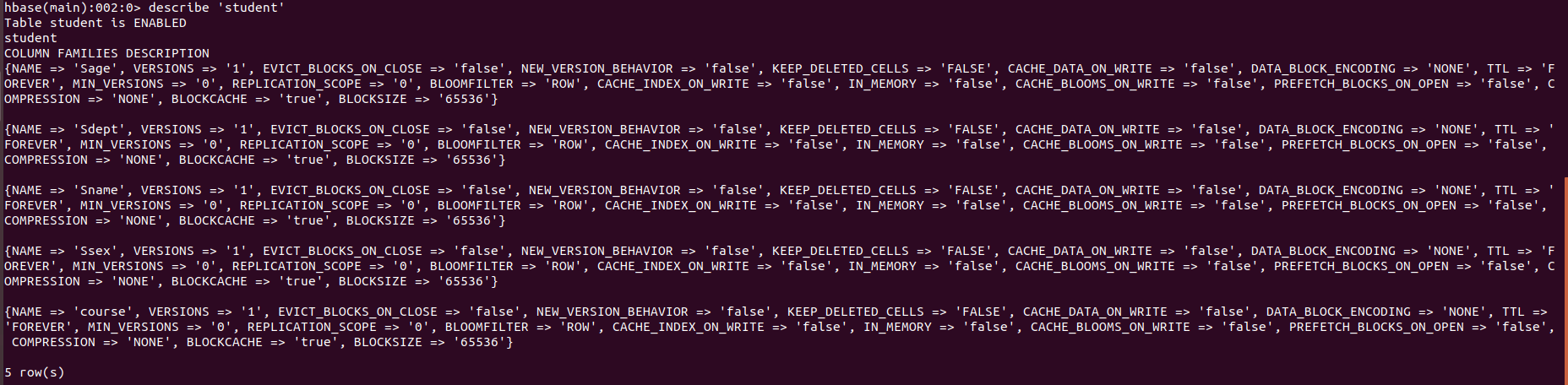

Use describe 'student' to view the structure of the table. (desc 'table name' is the short form.)

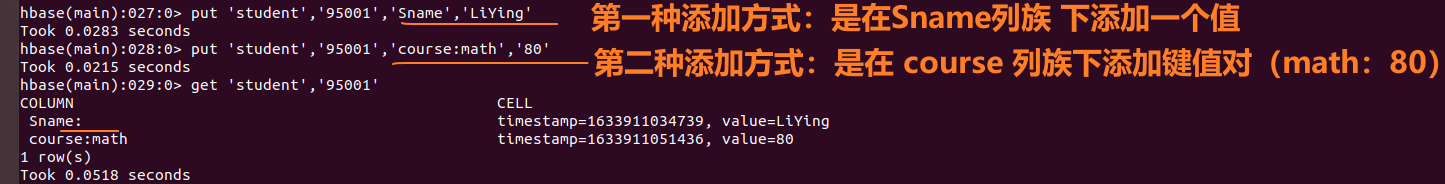

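Next come the usual HBase operations (create, read, update, delete).
✿ Adding data
② put (the first argument is the table name, the second is the row key, the third is the column family with an optional column qualifier)
Note: a single put writes one column of one row of one table, that is, one value into one cell. Inserting data through the shell is therefore slow, and in practice data is usually manipulated programmatically.
Syntax: put 'table name','row key','column family:column qualifier','value'
Example: add a row to the student table for student number 95001, named LiYing; the row key is 95001.

    put 'student','95001','Sname','LiYing'

Example: write a value into the math column of the course column family in row 95001:

    put 'student','95001','course:math','80'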
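The column argument of put has the form 'column family:column qualifier', and the qualifier may be left out. The sketch below shows one way to split such a string in Java before handing the parts to the client API. ColumnSpec is a hypothetical helper written for this post, not part of the HBase client library, and treating a missing colon as an empty qualifier is my assumption about how to mirror the shell's behaviour.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical helper (not an HBase class): splits "family:qualifier" as used by the shell's put. */
final class ColumnSpec {
    final String family;
    final String qualifier; // empty string when no qualifier was given

    private ColumnSpec(String family, String qualifier) {
        this.family = family;
        this.qualifier = qualifier;
    }

    /** Parses "family" or "family:qualifier"; only the first colon separates the two parts. */
    static ColumnSpec parse(String spec) {
        if (spec == null || spec.isEmpty()) {
            throw new IllegalArgumentException("empty column spec");
        }
        int idx = spec.indexOf(':');
        if (idx == 0) {
            throw new IllegalArgumentException("missing column family in: " + spec);
        }
        if (idx < 0) {
            return new ColumnSpec(spec, ""); // family only, e.g. 'Sname'
        }
        return new ColumnSpec(spec.substring(0, idx), spec.substring(idx + 1));
    }

    byte[] familyBytes() {
        return family.getBytes(StandardCharsets.UTF_8);
    }

    byte[] qualifierBytes() {
        return qualifier.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        for (String s : new String[] {"Sname", "course:math"}) {
            ColumnSpec c = parse(s);
            System.out.println(s + " -> family=" + c.family + ", qualifier='" + c.qualifier + "'");
        }
    }
}
```

With this helper, the bytes returned by familyBytes() and qualifierBytes() could be passed to Put.addColumn in the Java examples later in the post, so that 'Sname' and 'course:math' are handled by the same code path.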

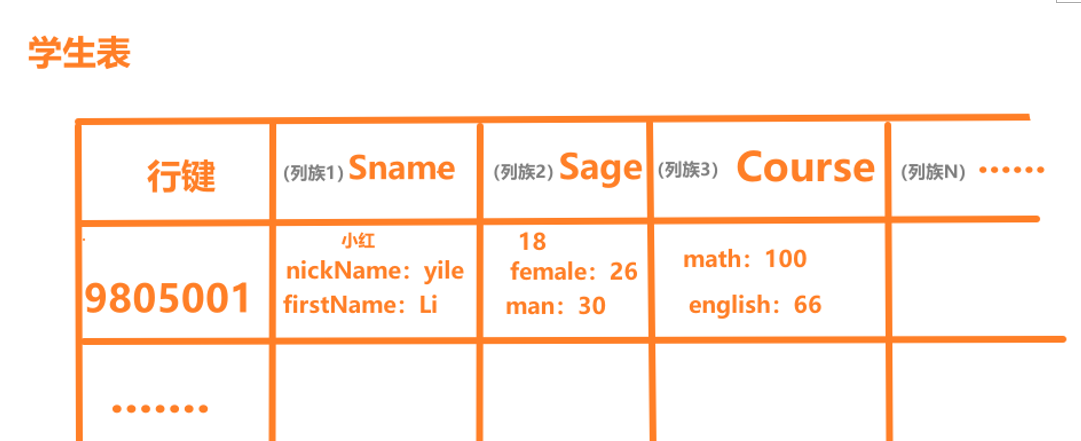

What this looks like as a table (roughly; the names may not match the commands above, the picture is only meant to show the layout):

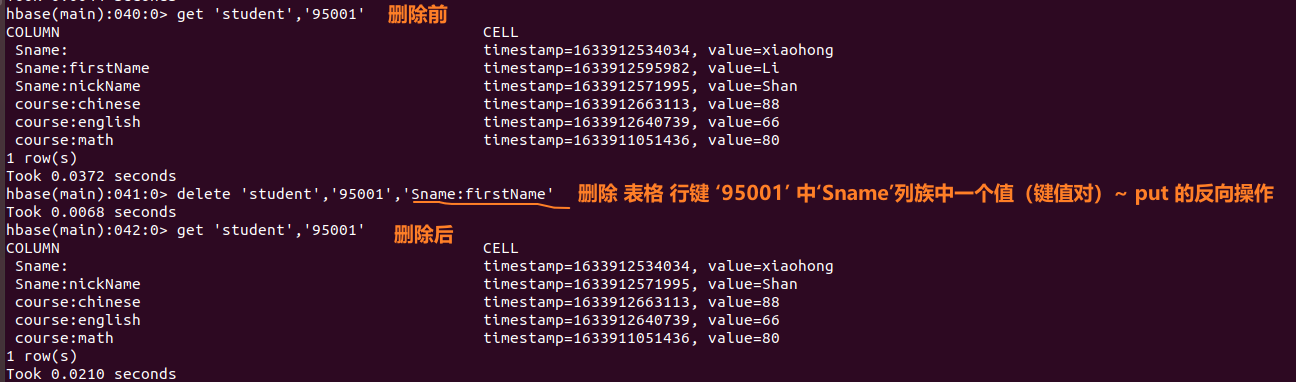

✿ Deleting data
③ delete:
□ The inverse of put (deletes a single cell):

    delete 'student','95001','Sname:firstName'

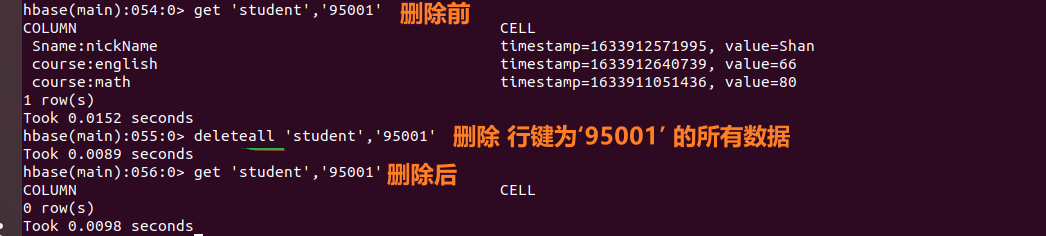

□ Deleting all data in a row:

    deleteall 'student','95001'

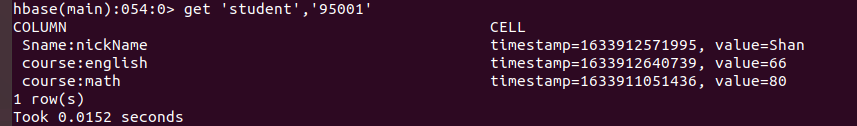

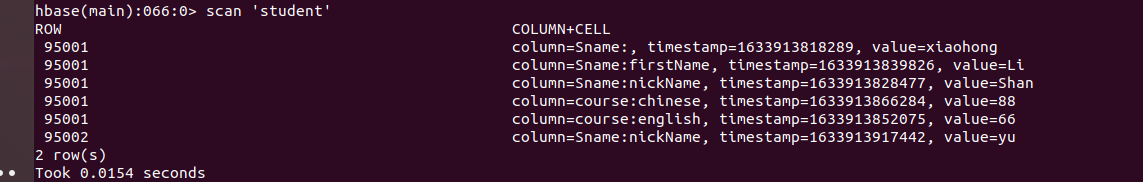

□ Deleting a table:

    disable 'student'   # make the table unavailable
    drop 'student'      # delete the table

✿ Viewing data:
□ get: shows the data in one row
□ scan: shows all the data in a table

    get 'student','95001'

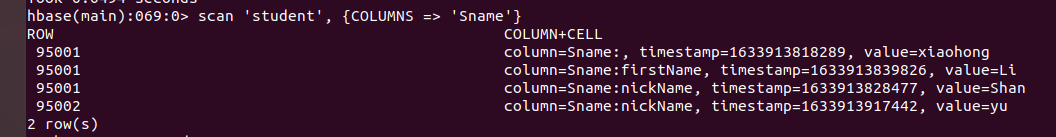

You can also narrow a query, for example to the data of a single column family:

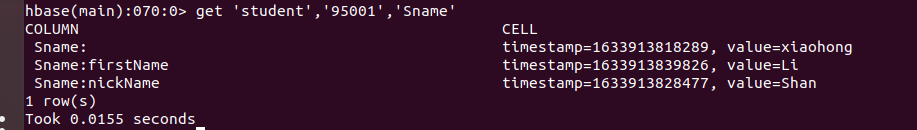

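✿ Querying historical data:
④ Querying the historical versions of a table takes three steps.
1. When creating the table, specify how many versions to keep (5 in this example):

    create 'teacher',{NAME=>'username',VERSIONS=>5}

2. Insert data and then update it so that historical versions are produced. Note that both inserting and updating use the put command:

    put 'teacher','91001','username','Mary'
    put 'teacher','91001','username','Mary1'
    put 'teacher','91001','username','Mary2'
    put 'teacher','91001','username','Mary3'
    put 'teacher','91001','username','Mary4'
    put 'teacher','91001','username','Mary5'

3. When querying, specify how many historical versions to return. By default only the latest value is returned:

    get 'teacher','91001',{COLUMN=>'username',VERSIONS=>3}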
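To make the effect of VERSIONS easier to picture, here is a small toy model in plain Java. It is not HBase code: VersionedCell is a hypothetical class that keeps values sorted by timestamp and retains at most maxVersions of them. The sketch drops surplus versions immediately on write, which is a simplification; as far as I understand, a real cluster hides them on read and removes them physically later.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.TreeMap;

/** Toy model of a single cell with bounded version history (hypothetical, not an HBase class). */
final class VersionedCell {
    private final int maxVersions;
    // Reverse ordering keeps the newest timestamp first.
    private final TreeMap<Long, String> versions = new TreeMap<>(Collections.reverseOrder());

    VersionedCell(int maxVersions) {
        if (maxVersions < 1) {
            throw new IllegalArgumentException("maxVersions must be at least 1");
        }
        this.maxVersions = maxVersions;
    }

    /** Stores a value under a timestamp and discards the oldest versions beyond the limit. */
    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        while (versions.size() > maxVersions) {
            versions.pollLastEntry(); // last entry is the oldest because of the reverse ordering
        }
    }

    /** Returns up to n values, newest first (like get ... VERSIONS=>n in the shell). */
    List<String> get(int n) {
        List<String> out = new ArrayList<>();
        for (String v : versions.values()) {
            if (out.size() == n) {
                break;
            }
            out.add(v);
        }
        return out;
    }

    public static void main(String[] args) {
        VersionedCell username = new VersionedCell(5);
        String[] values = {"Mary", "Mary1", "Mary2", "Mary3", "Mary4", "Mary5"};
        for (int i = 0; i < values.length; i++) {
            username.put(i + 1, values[i]); // timestamps 1..6 stand in for real write times
        }
        System.out.println(username.get(3)); // the three newest values
        System.out.println(username.get(1)); // the default view: only the newest value
    }
}
```

In this model the six puts from step 2 leave five stored values, because the table was created with VERSIONS=>5 and the oldest value falls out of the history.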

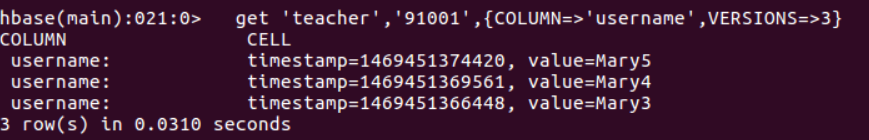

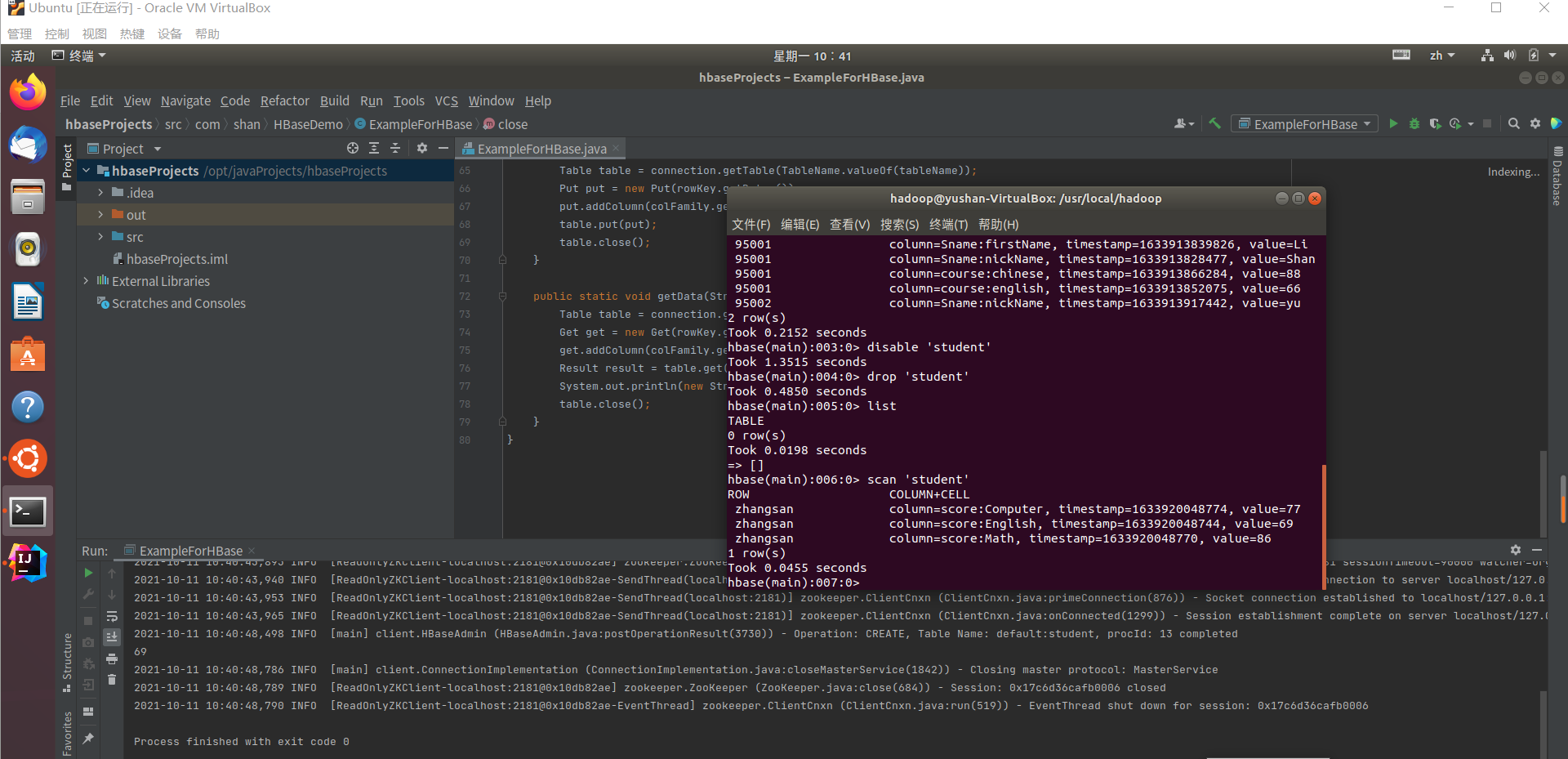

✿ Leaving the HBase shell
⑤ Command: exit
Note: exit only leaves the shell session used to operate on tables; it does not stop the HBase service running in the background.

II. HBase programming practice:
✿ Preparation: importing the jar packages
Steps: File -> Project Structure -> Libraries -> + -> select the jars to import. When the import is done, click Apply and then OK.
(1) Go to /usr/local/hbase/lib and select all the jar files in that directory (do not select the four directories client-facing-thirdparty, ruby, shaded-clients and zkcli).
(2) Go to /usr/local/hbase/lib/client-facing-thirdparty and select all the jar files in that directory.

Example: create a table, insert data, and read the data back

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.*;
    import org.apache.hadoop.hbase.client.*;
    import org.apache.hadoop.hbase.util.Bytes;
    import java.io.IOException;

    public class ExampleForHBase {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void main(String[] args) throws IOException {
            init(); // connect to HBase
            // Shell equivalent: create 'table name','family 1','family 2','family 3' ...
            createTable("student", new String[]{"score"});
            // Shell equivalent: put 'student','zhangsan','score:English','69'
            insertData("student", "zhangsan", "score", "English", "69");
            insertData("student", "zhangsan", "score", "Math", "86");
            insertData("student", "zhangsan", "score", "Computer", "77");
            getData("student", "zhangsan", "score", "English");
            close();
        }

        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void createTable(String myTableName, String[] colFamily) throws IOException {
            TableName tableName = TableName.valueOf(myTableName);
            if (admin.tableExists(tableName)) {
                System.out.println("table already exists!");
            } else {
                TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(tableName);
                for (String str : colFamily) {
                    ColumnFamilyDescriptor family =
                            ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes(str)).build();
                    tableDescriptor.setColumnFamily(family);
                }
                admin.createTable(tableDescriptor.build());
            }
        }

        public static void insertData(String tableName, String rowKey, String colFamily,
                                      String col, String val) throws IOException {
            Table table = connection.getTable(TableName.valueOf(tableName));
            Put put = new Put(rowKey.getBytes());
            put.addColumn(colFamily.getBytes(), col.getBytes(), val.getBytes());
            table.put(put);
            table.close();
        }

        public static void getData(String tableName, String rowKey, String colFamily,
                                   String col) throws IOException {
            Table table = connection.getTable(TableName.valueOf(tableName));
            Get get = new Get(rowKey.getBytes());
            get.addColumn(colFamily.getBytes(), col.getBytes());
            Result result = table.get(get);
            // getValue returns null when the cell does not exist
            byte[] value = result.getValue(colFamily.getBytes(), col.getBytes());
            System.out.println(value == null ? "null" : new String(value));
            table.close();
        }
    }

After running the program, enter scan 'student' in the HBase shell to check the result.

III. Lab: getting familiar with common HBase operations:
(1) Implement the following functions in code, and complete the same tasks with the HBase Shell commands provided by Hadoop:
List information about all HBase tables, such as table names;
Print all records of a specified table to the terminal;
Add and delete a specified column family or column in an existing table;
Clear all records of a specified table;
Count the rows of a table.

1. List information about all HBase tables, such as table names:
■ HBase Shell: list
■ Java API:

    /*
     * As usual: open a connection, run the operation, then close the connection.
     * The key call is HTableDescriptor[] hTableDescriptors = admin.listTables();
     * it returns the list of tables, which is then iterated.
     * (listTables() is deprecated in HBase 2.x in favour of listTableDescriptors().)
     */
    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.*;
    import org.apache.hadoop.hbase.client.*;

    public class Test_1 {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        /** Open the connection. */
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /** Close the connection. */
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /** List the existing tables with listTables(). */
        public static void listTables() throws IOException {
            init();
            HTableDescriptor[] hTableDescriptors = admin.listTables();
            for (HTableDescriptor hTableDescriptor : hTableDescriptors) {
                System.out.println(hTableDescriptor.getNameAsString());
            }
            close();
        }

        public static void main(String[] args) {
            try {
                System.out.println("Tables stored in HBase:");
                listTables();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

2. Print all records of a specified table to the terminal:
■ HBase Shell: scan 'student'
■ Java API:

    /*
     * As usual: open a connection, run the operation, then close the connection.
     * The key calls are:
     *   Table table = connection.getTable(TableName.valueOf(tableName)); // get the table object
     *   Scan scan = new Scan();
     *   ResultScanner scanner = table.getScanner(scan);
     * The Scan object yields a ResultScanner, whose results are iterated and printed.
     */
    import java.io.IOException;
    import java.util.Scanner;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.*;
    import org.apache.hadoop.hbase.client.*;

    public class Test_2 {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        // Open the connection
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /** Print every record of the table with the given name. */
        public static void getData(String tableName) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Scan scan = new Scan();
            ResultScanner scanner = table.getScanner(scan);
            for (Result result : scanner) {
                showCell(result);
            }
            scanner.close();
            table.close();
            close();
        }

        /** Formatted output of every cell in a result. */
        public static void showCell(Result result) {
            Cell[] cells = result.rawCells();
            for (Cell cell : cells) {
                System.out.println("RowName: " + new String(CellUtil.cloneRow(cell)));
                System.out.println("Timestamp: " + cell.getTimestamp());
                System.out.println("Column Family: " + new String(CellUtil.cloneFamily(cell)));
                System.out.println("Column Name: " + new String(CellUtil.cloneQualifier(cell)));
                System.out.println("Value: " + new String(CellUtil.cloneValue(cell)));
                System.out.println();
            }
        }

        public static void main(String[] args) throws IOException {
            System.out.println("Enter the name of the table to display:");
            Scanner scan = new Scanner(System.in);
            String tableName = scan.nextLine();
            System.out.println("Records:");
            getData(tableName);
        }
    }

3. Add and delete a specified column family or column in an existing table:
■ HBase Shell:

    put 'student','95003','Sname','wangjinxuan'         # write to family Sname without a qualifier
    put 'student','95003','Sname:nickName','wang'       # add column nickName under family Sname
    put 'student','95003','Sname:firstName','jinxuan'   # add column firstName under family Sname

delete, the inverse of put:

    delete 'student','95003','Sname'
    delete 'student','95003','Sname:nickName'
    deleteall 'student','95003'                         # delete the whole row

These commands add and remove columns inside the existing family Sname. Adding or removing a whole column family changes the table schema and is done with the shell's alter command instead.
■ Java API:

    /*
     * HBase fixes only the row key and the column families when a table is created. Column
     * qualifiers are not declared up front and can differ from row to row, so "adding a column"
     * simply means writing a cell with a new qualifier.
     *
     * As usual: open a connection, run the operation, then close the connection.
     * 1. Get the table:
     *      Table table = connection.getTable(TableName.valueOf(tableName));
     * 2. Insert: create a Put for the row key, add family, qualifier and value with addColumn,
     *    then put it into the table:
     *      Put put = new Put(rowKey.getBytes());
     *      put.addColumn(colFamily.getBytes(), col.getBytes(), val.getBytes());
     *      table.put(put);
     * 3. Delete: get the table the same way, create a Delete for the row key, add what should
     *    be deleted, then apply it:
     *      Delete delete = new Delete(rowKey.getBytes());
     *      delete.addFamily(colFamily.getBytes());                  // every column of the family
     *      delete.addColumn(colFamily.getBytes(), col.getBytes());  // latest version of one column
     *      table.delete(delete);
     */
    import java.io.IOException;
    import java.util.Scanner;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;

    public class Test_3 {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        // Open the connection
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /**
         * Insert a value into one column of one row.
         *
         * @param tableName table name
         * @param rowKey    row key
         * @param colFamily column family name
         * @param col       column name (may be null if the family is used without a qualifier)
         * @param val       value
         */
        public static void insertRow(String tableName, String rowKey, String colFamily,
                                     String col, String val) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Put put = new Put(rowKey.getBytes());
            // A null column name means "no qualifier": write with an empty qualifier.
            byte[] qualifier = (col == null) ? new byte[0] : col.getBytes();
            put.addColumn(colFamily.getBytes(), qualifier, val.getBytes());
            table.put(put);
            table.close();
            close();
        }

        /** Print every record of the table with the given name. */
        public static void getData(String tableName) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Scan scan = new Scan();
            ResultScanner scanner = table.getScanner(scan);
            for (Result result : scanner) {
                showCell(result);
            }
            scanner.close();
            table.close();
            close();
        }

        /** Formatted output of every cell in a result. */
        public static void showCell(Result result) {
            Cell[] cells = result.rawCells();
            for (Cell cell : cells) {
                System.out.println("RowName: " + new String(CellUtil.cloneRow(cell)));
                System.out.println("Timestamp: " + cell.getTimestamp());
                System.out.println("Column Family: " + new String(CellUtil.cloneFamily(cell)));
                System.out.println("Column Name: " + new String(CellUtil.cloneQualifier(cell)));
                System.out.println("Value: " + new String(CellUtil.cloneValue(cell)));
                System.out.println();
            }
        }

        /**
         * Delete data.
         *
         * @param tableName table name
         * @param rowKey    row key
         * @param colFamily column family name
         * @param col       column name, or null to delete the whole family in this row
         */
        public static void deleteRow(String tableName, String rowKey, String colFamily,
                                     String col) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Delete delete = new Delete(rowKey.getBytes());
            if (col == null) {
                // delete every column of the given family in this row
                delete.addFamily(colFamily.getBytes());
            } else {
                // delete the latest version of the given column (family:qualifier)
                delete.addColumn(colFamily.getBytes(), col.getBytes());
            }
            table.delete(delete);
            table.close();
            close();
        }

        public static void main(String[] args) {
            String tableName = "student";
            Scanner scan = new Scanner(System.in);
            boolean flag = true;
            while (flag) {
                System.out.println("------ Add or delete a column family or column in an existing table ------");
                System.out.println(" Enter the operation: 1 - add, 2 - delete ");
                String choose1 = scan.nextLine();
                switch (choose1) {
                    case "1":
                        try {
                            // put 'student','95003','Sname','wangjinxuan'
                            // put 'student','95003','Sname:nickName','wang'
                            // put 'student','95003','Sname:firstName','jinxuan'
                            // insertRow(tableName, rowKey, colFamily, col, val);
                            insertRow("student", "95003", "Sname", null, "wangjinxuan");
                            insertRow("student", "95003", "Sname", "nickName", "wang");
                            insertRow("student", "95003", "Sname", "firstName", "jinxuan");
                            System.out.println("Insert succeeded:");
                            getData(tableName);
                        } catch (IOException e) {
                            e.printStackTrace();
                        }
                        break;
                    case "2":
                        try {
                            System.out.println("------ Table contents before deletion ------");
                            getData(tableName);
                            // delete 'student','95003','Sname'
                            // delete 'student','95003','Sname:nickName'
                            // deleteRow(tableName, rowKey, colFamily, col);
                            deleteRow("student", "95003", "Sname", "firstName");
                            System.out.println("------ Delete succeeded ------\n");
                            System.out.println("------ Table contents after deletion ------");
                            getData(tableName);
                        } catch (IOException e) {
                            e.printStackTrace();
                        }
                        break;
                }
                System.out.println(" Continue? yes - true, no - false ");
                flag = Boolean.parseBoolean(scan.nextLine().trim());
            }
            System.out.println(" Program exited. ");
        }
    }

4. Clear all records of a specified table:
■ HBase Shell: truncate 'student'
■ Java API:

    import java.io.IOException;
    import java.util.Scanner;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.client.TableDescriptor;

    public class Test_4 {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        // Open the connection
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /**
         * Clear all records of the specified table.
         *
         * @param tableName name of the table to clear
         */
        public static void clearRows(String tableName) throws IOException {
            init();
            TableName tablename = TableName.valueOf(tableName);
            // Overall idea: save the table's schema (name, column families), drop the table,
            // then recreate it from the saved schema. The schema is read through the Admin
            // opened in init() (Admin.getDescriptor, HBase 2.x API).
            TableDescriptor tDescriptor = admin.getDescriptor(tablename);
            // Drop the table
            admin.disableTable(tablename);
            admin.deleteTable(tablename);
            // Recreate the table
            admin.createTable(tDescriptor);
            close();
        }

        /** Print every record of the table with the given name. */
        public static void getData(String tableName) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Scan scan = new Scan();
            ResultScanner scanner = table.getScanner(scan);
            for (Result result : scanner) {
                showCell(result);
            }
            scanner.close();
            table.close();
            close();
        }

        /** Formatted output of every cell in a result. */
        public static void showCell(Result result) {
            Cell[] cells = result.rawCells();
            for (Cell cell : cells) {
                System.out.println("RowName: " + new String(CellUtil.cloneRow(cell)));
                System.out.println("Timestamp: " + cell.getTimestamp());
                System.out.println("Column Family: " + new String(CellUtil.cloneFamily(cell)));
                System.out.println("Column Name: " + new String(CellUtil.cloneQualifier(cell)));
                System.out.println("Value: " + new String(CellUtil.cloneValue(cell)));
                System.out.println();
            }
        }

        public static void main(String[] args) {
            Scanner scan = new Scanner(System.in);
            System.out.println("Enter the name of the table to clear:");
            String tableName = scan.nextLine();
            try {
                System.out.println("Table contents before clearing:");
                getData(tableName);
                clearRows(tableName);
                System.out.println("Table cleared.");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

5. Count the rows of a table:
■ HBase Shell: count 'student'
■ Java API:

    import java.io.IOException;
    import java.util.Scanner;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;

    public class Test_5 {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        // Open the connection
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /** Count rows by scanning the whole table: each Result is one row. */
        public static void countRows(String tableName) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Scan scan = new Scan();
            ResultScanner scanner = table.getScanner(scan);
            int num = 0;
            for (Result result = scanner.next(); result != null; result = scanner.next()) {
                num++;
            }
            System.out.println("Row count: " + num);
            scanner.close();
            table.close();
            close();
        }

        public static void main(String[] args) throws IOException {
            Scanner scan = new Scanner(System.in);
            System.out.println("Enter the name of the table whose rows should be counted:");
            String tableName = scan.nextLine();
            countRows(tableName);
        }
    }

(2) Given the following tables and data from a relational database, convert them into tables suitable for HBase and insert the data: the student table (Student), the course table (Course) and the enrollment table (SC).

    create 'Student','S_No','S_Name','S_Sex','S_Age'
    put 'Student','s001','S_No','2015001'
    put 'Student','s001','S_Name','Zhangsan'
    put 'Student','s001','S_Sex','male'
    put 'Student','s001','S_Age','23'
    put 'Student','s002','S_No','2015002'
    put 'Student','s002','S_Name','Mary'
    put 'Student','s002','S_Sex','female'
    put 'Student','s002','S_Age','22'
    put 'Student','s003','S_No','2015003'
    put 'Student','s003','S_Name','Lisi'
    put 'Student','s003','S_Sex','male'
    put 'Student','s003','S_Age','24'

    create 'Course','C_No','C_Name','C_Credit'
    put 'Course','c001','C_No','123001'
    put 'Course','c001','C_Name','Math'
    put 'Course','c001','C_Credit','2.0'
    put 'Course','c002','C_No','123002'
    put 'Course','c002','C_Name','Computer'
    put 'Course','c002','C_Credit','5.0'
    put 'Course','c003','C_No','123003'
    put 'Course','c003','C_Name','English'
    put 'Course','c003','C_Credit','3.0'

    create 'SC','SC_Sno','SC_Cno','SC_Score'
    put 'SC','sc001','SC_Sno','2015001'
    put 'SC','sc001','SC_Cno','123001'
    put 'SC','sc001','SC_Score','86'
    put 'SC','sc002','SC_Sno','2015001'
    put 'SC','sc002','SC_Cno','123003'
    put 'SC','sc002','SC_Score','69'
    put 'SC','sc003','SC_Sno','2015002'
    put 'SC','sc003','SC_Cno','123002'
    put 'SC','sc003','SC_Score','77'
    put 'SC','sc004','SC_Sno','2015002'
    put 'SC','sc004','SC_Cno','123003'
    put 'SC','sc004','SC_Score','99'
    put 'SC','sc005','SC_Sno','2015003'
    put 'SC','sc005','SC_Cno','123001'
    put 'SC','sc005','SC_Score','98'
    put 'SC','sc006','SC_Sno','2015003'
    put 'SC','sc006','SC_Cno','123002'
    put 'SC','sc006','SC_Score','95'

In addition, implement the following functions in code:

① createTable(String tableName, String[] fields): create a table. tableName is the name of the table, and the string array fields holds the names of the record's fields (a field name here is a column family name). If HBase already contains a table named tableName, delete the existing table first and then create the new one.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import java.io.IOException;

    public class CreateTable {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void createTable(String tableName, String[] fields) throws IOException {
            init();
            TableName tablename = TableName.valueOf(tableName);
            if (admin.tableExists(tablename)) {
                System.out.println("table already exists, deleting it first");
                admin.disableTable(tablename);
                admin.deleteTable(tablename);
            }
            HTableDescriptor hTableDescriptor = new HTableDescriptor(tablename);
            for (String str : fields) {
                HColumnDescriptor hColumnDescriptor = new HColumnDescriptor(str);
                hTableDescriptor.addFamily(hColumnDescriptor);
            }
            admin.createTable(hTableDescriptor);
            close();
        }

        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            String[] fields = {"Score"};
            try {
                createTable("person", fields);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

② addRecord(String tableName, String row, String[] fields, String[] values): add the data in values to the cells specified by table tableName, row row (identified by S_Name) and the string array fields. If an element of fields refers to a column qualifier under a column family, it is written as "columnFamily:column". For example, to add scores to the three columns "Math", "Computer Science" and "English" at once, fields is {"Score:Math", "Score:Computer Science", "Score:English"} and values holds the scores of the three courses.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.*;
    import java.io.IOException;

    public class AddRecord {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void addRecord(String tableName, String row, String[] fields,
                                     String[] values) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            // All cells belong to the same row, so they can share one Put.
            Put put = new Put(row.getBytes());
            for (int i = 0; i < fields.length; i++) {
                // "family:qualifier"; a field without a colon is a family with an empty qualifier
                String[] cols = fields[i].split(":", 2);
                byte[] qualifier = cols.length == 2 ? cols[1].getBytes() : new byte[0];
                put.addColumn(cols[0].getBytes(), qualifier, values[i].getBytes());
            }
            table.put(put);
            table.close();
            close();
        }

        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            String[] fields = {"Score:Math", "Score:Computer Science", "Score:English"};
            String[] values = {"99", "80", "100"};
            try {
                addRecord("person", "Score", fields, values);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

③ scanColumn(String tableName, String column): browse the data of one column of table tableName; if a row has no data in that column, return null. When column is a column family name and the family contains several column qualifiers, list the data of every column under it; when column is a specific column name (for example "Score:Math"), list only that column's data.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.*;
    import org.apache.hadoop.hbase.util.Bytes;
    import java.io.IOException;

    public class ScanColumn {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void scanColumn(String tableName, String column) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Scan scan = new Scan();
            String[] cols = column.split(":", 2);
            if (cols.length == 2) {
                // "family:qualifier": restrict the scan to that single column
                scan.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(cols[1]));
            } else {
                // family name only: return every column under the family
                scan.addFamily(Bytes.toBytes(column));
            }
            ResultScanner scanner = table.getScanner(scan);
            for (Result result = scanner.next(); result != null; result = scanner.next()) {
                showCell(result);
            }
            scanner.close();
            table.close();
            close();
        }

        public static void showCell(Result result) {
            Cell[] cells = result.rawCells();
            for (Cell cell : cells) {
                System.out.println("RowName: " + new String(CellUtil.cloneRow(cell)));
                System.out.println("Timestamp: " + cell.getTimestamp());
                System.out.println("Column Family: " + new String(CellUtil.cloneFamily(cell)));
                System.out.println("Column Name: " + new String(CellUtil.cloneQualifier(cell)));
                System.out.println("Value: " + new String(CellUtil.cloneValue(cell)));
            }
        }

        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            try {
                scanColumn("person", "Score");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

④ modifyData(String tableName, String row, String column): modify the data in the cell specified by table tableName, row row (which can be the student name S_Name) and column column. The implementation takes the new value as a fourth parameter, val, and expects column in the form "columnFamily:column".

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.*;
    import java.io.IOException;

    public class ModifyData {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void modifyData(String tableName, String row, String column,
                                      String val) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            // column is "columnFamily:qualifier", for example "Score:Math"
            String[] cols = column.split(":", 2);
            byte[] qualifier = cols.length == 2 ? cols[1].getBytes() : new byte[0];
            Put put = new Put(row.getBytes());
            // A put to an existing cell stores a new version, which becomes the current value.
            put.addColumn(cols[0].getBytes(), qualifier, val.getBytes());
            table.put(put);
            table.close();
            close();
        }

        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            try {
                modifyData("person", "Score", "Score:Math", "100");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

⑤ deleteRow(String tableName, String row): delete the record of the row specified by row from table tableName.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.*;
    import java.io.IOException;

    public class DeleteRow {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void deleteRow(String tableName, String row) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            // A Delete built from the row key alone removes every cell in that row.
            Delete delete = new Delete(row.getBytes());
            table.delete(delete);
            table.close();
            close();
        }

        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            try {
                deleteRow("person", "Score");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
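Looking back at the relational-to-HBase conversion in part (2): each relational row becomes one put per column, with the relational column name used as the column family. Typing those commands by hand is tedious, so here is a rough sketch of how they could be generated. PutScriptGenerator and its toPuts method are hypothetical names of my own, the code only builds shell command strings (it does not talk to HBase), and it assumes that values contain no single quotes.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: turns one relational row into HBase shell put commands. */
final class PutScriptGenerator {

    /**
     * Builds one put command per column of the row.
     * Assumption: no value contains a single quote, so no escaping is done.
     */
    static List<String> toPuts(String table, String rowKey, Map<String, String> row) {
        List<String> commands = new ArrayList<>();
        for (Map.Entry<String, String> column : row.entrySet()) {
            commands.add(String.format("put '%s','%s','%s','%s'",
                    table, rowKey, column.getKey(), column.getValue()));
        }
        return commands;
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the columns in insertion order.
        Map<String, String> student = new LinkedHashMap<>();
        student.put("S_No", "2015001");
        student.put("S_Name", "Zhangsan");
        student.put("S_Sex", "male");
        student.put("S_Age", "23");
        for (String command : toPuts("Student", "s001", student)) {
            System.out.println(command);
        }
    }
}
```

The printed lines can be pasted into the HBase shell after the matching create command has been run.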

References:
"HBase2.2.2安装和编程实践指南" (HBase 2.2.2 installation and programming practice guide), 厦大数据库实验室博客 (xmu.edu.cn)
"实验3 熟悉常用的 HBase 操作" https://blog.csdn.net/qq_38648558/article/details/83033050
"实验3_熟悉常用的HBase操作" https://blog.csdn.net/qq_50596778/article/details/120552574

This post comes from cnblogs (博客园), author: 一乐乐. When reposting, please cite the original link: https://www.cnblogs.com/shan333/p/15388633.html
