机器人标定总结 |

您所在的位置:网站首页 › 机器人运动范围是什么意思 › 机器人标定总结 |

机器人标定总结

|

1 引言

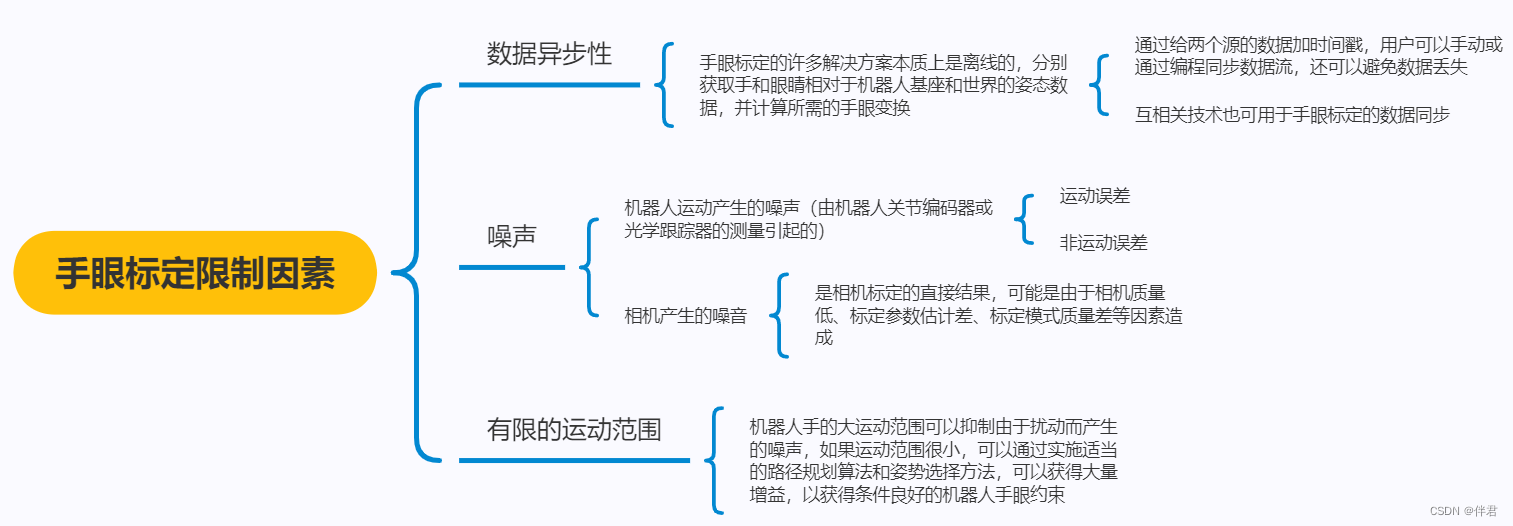

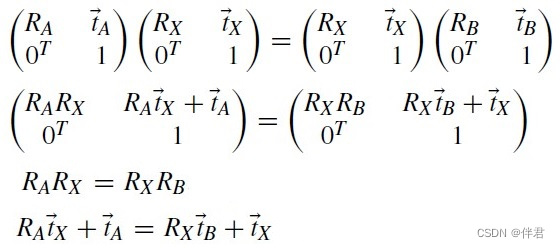

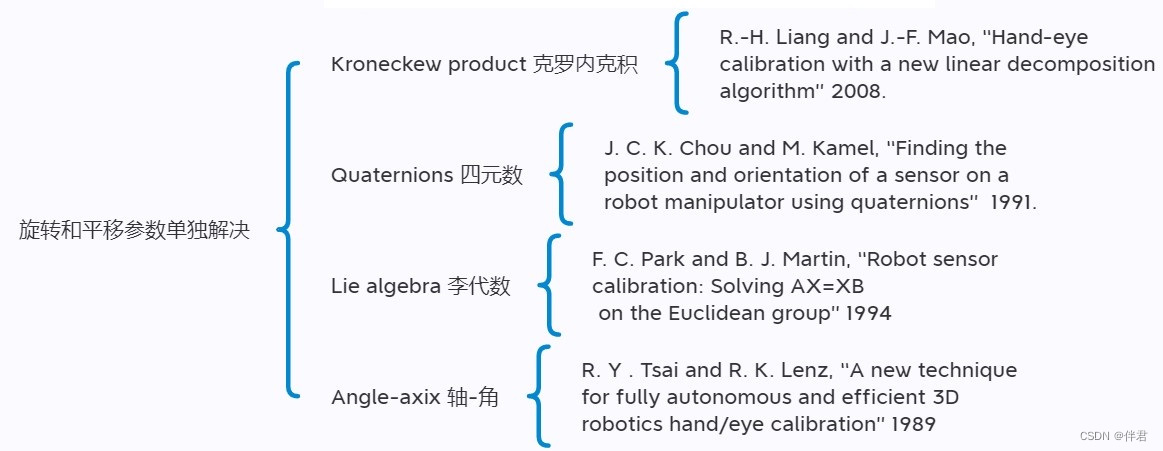

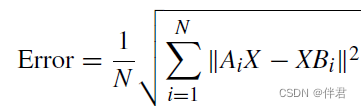

工业机器人虽然重复定位精度很高,但由于绝对定位精度很低限制了工业机器人的应用,因此提高绝对定位精度能扩展工业机器人的应用范围。机器人可以将传感器安装在固定位置,具有固定的位置(eye-in-hand),也可以将传感器安装在机器人的手上,以便通过改变摄像头的视角来获取新图像(即eye-to-hand)。为了使机器人能够准确估计零件相对于其自身底座的三维位置和方向,需要知道机器手臂和其自身底座、相机和手臂以及相机与工件的相对位置和方向。这三项任务需要校准机器人、传感器和机器手臂对传感器(手眼)。校准机器人主要是对机器人运动学参数进行标定,基于机器人误差模型获取机器人运动学参数误差,将误差补偿到原有名义参数上达到机器人标定的目的。校准传感器对于搭载的传感器为相机而言就是获取相机内参数以及标定物相对于相机坐标系的转换矩阵。校准机器手臂与传感器即我们所谓的手眼关系标定,主要是确定机器人末端和传感器之间的关系,这也是本篇文章的重点。 2 基础知识 在学习机器人标定之前,首先要了解其对应的坐标系,分别对应下图的机器人基座坐标系、机器臂末端坐标系、相机坐标系以及世界坐标系。 手眼标定方法主要分为三大类,分别有上面提到的AX=XB齐次方程求解法、最小重投影误差法、人工神经网络(ANN)法。分别对应下图: 基于齐次方程求解方式可以旋转和平移参数是否同时解算分为两大类:旋转和平移参数单独解决以及旋转和平移参数同时解决。 旋转和平移参数单独解决 旋转和平移参数单独解决的方法原理具体如下:齐次方程AX = XB,展开如下 与齐次变换方程相比,该技术的主要优点是,它直接获取校准对象的图像,而无需对相机进行明确的姿态估计。 3.3 人工神经网络:在手眼标定中使用ANN可以被认为是找到机器人基座的手坐标和校准对象的相应图像坐标之间的映射,该问题可以表示为A=fn(x)。优点:可以在不了解相机参数或姿态估计的情况下使用,因为其具有很强的泛化变量之间非线性关系的能力,这也使得它适合处理噪声。缺点:提供的解通常无法解释,性能取决于所使用的网络结构,也要防止过拟合现象。 4 标定目标前面一直介绍的都是以普通摄像机作为传感器,它们的标定目标一般有三类,基于棋盘格、基于圆形栅格、其他(charuCo),具体形状如下图所示:

这里有一些基于AX=XB齐次方程求解方法的源码,只需要在使用的时候调用这些函数即可。 // This file is part of OpenCV project. // It is subject to the license terms in the LICENSE file found in the top-level directory // of this distribution and at http://opencv.org/license.html. #include "opencv2/core.hpp" #include "opencv2/imgproc.hpp" #include "opencv2/highgui.hpp" #include "opencv2/calib3d.hpp" #include "iostream" using namespace std; namespace cv { static Mat homogeneousInverse(const Mat& T) { CV_Assert(T.rows == 4 && T.cols == 4); Mat R = T(Rect(0, 0, 3, 3)); Mat t = T(Rect(3, 0, 1, 3)); Mat Rt = R.t(); Mat tinv = -Rt * t; Mat Tinv = Mat::eye(4, 4, T.type()); Rt.copyTo(Tinv(Rect(0, 0, 3, 3))); tinv.copyTo(Tinv(Rect(3, 0, 1, 3))); return Tinv; } // q = rot2quatMinimal(R) // // R - 3x3 rotation matrix, or 4x4 homogeneous matrix // q - 3x1 unit quaternion // q = sin(theta/2) * v // theta - rotation angle // v - unit rotation axis, |v| = 1 // Reference: http://www.euclideanspace.com/maths/geometry/rotations/conversions/matrixToQuaternion/ static Mat rot2quatMinimal(const Mat& R) { CV_Assert(R.type() == CV_64FC1 && R.rows >= 3 && R.cols >= 3); double m00 = R.at(0,0), m01 = R.at(0,1), m02 = R.at(0,2); double m10 = R.at(1,0), m11 = R.at(1,1), m12 = R.at(1,2); double m20 = R.at(2,0), m21 = R.at(2,1), m22 = R.at(2,2); double trace = m00 + m11 + m22; double qx, qy, qz; if (trace > 0) { double S = sqrt(trace + 1.0) * 2; // S=4*qw qx = (m21 - m12) / S; qy = (m02 - m20) / S; qz = (m10 - m01) / S; } else if (m00 > m11 && m00 > m22) { double S = sqrt(1.0 + m00 - m11 - m22) * 2; // S=4*qx qx = 0.25 * S; qy = (m01 + m10) / S; qz = (m02 + m20) / S; } else if (m11 > m22) { double S = sqrt(1.0 + m11 - m00 - m22) * 2; // S=4*qy qx = (m01 + m10) / S; qy = 0.25 * S; qz = (m12 + m21) / S; } else { double S = sqrt(1.0 + m22 - m00 - m11) * 2; // S=4*qz qx = (m02 + m20) / S; qy = (m12 + m21) / S; qz = 0.25 * S; } return (Mat_(3,1) CV_Assert(q.type() == CV_64FC1 && q.rows == 3 && q.cols == 1); Mat p = q.t()*q; double w = sqrt(1 - p.at(0,0)); Mat diag_p = Mat::eye(3,3,CV_64FC1)*p.at(0,0); return 2*q*q.t() + 2*w*skew(q) + Mat::eye(3,3,CV_64FC1) - 2*diag_p; } // q = rot2quat(R) // // q - 4x1 unit quaternion // R - 3x3 rotation matrix // Reference: http://www.euclideanspace.com/maths/geometry/rotations/conversions/matrixToQuaternion/ static Mat rot2quat(const Mat& R) { CV_Assert(R.type() == CV_64FC1 && R.rows >= 3 && R.cols >= 3); double m00 = R.at(0,0), m01 = R.at(0,1), m02 = R.at(0,2); double m10 = R.at(1,0), m11 = R.at(1,1), m12 = R.at(1,2); double m20 = R.at(2,0), m21 = R.at(2,1), m22 = R.at(2,2); double trace = m00 + m11 + m22; double qw, qx, qy, qz; if (trace > 0) { double S = sqrt(trace + 1.0) * 2; // S=4*qw qw = 0.25 * S; qx = (m21 - m12) / S; qy = (m02 - m20) / S; qz = (m10 - m01) / S; } else if (m00 > m11 && m00 > m22) { double S = sqrt(1.0 + m00 - m11 - m22) * 2; // S=4*qx qw = (m21 - m12) / S; qx = 0.25 * S; qy = (m01 + m10) / S; qz = (m02 + m20) / S; } else if (m11 > m22) { double S = sqrt(1.0 + m11 - m00 - m22) * 2; // S=4*qy qw = (m02 - m20) / S; qx = (m01 + m10) / S; qy = 0.25 * S; qz = (m12 + m21) / S; } else { double S = sqrt(1.0 + m22 - m00 - m11) * 2; // S=4*qz qw = (m10 - m01) / S; qx = (m02 + m20) / S; qy = (m12 + m21) / S; qz = 0.25 * S; } return (Mat_(4,1) CV_Assert(A.channels() == 1 && B.channels() == 1); Mat1d Ad, Bd; A.convertTo(Ad, CV_64F); B.convertTo(Bd, CV_64F); Mat1d Kd(Ad.rows * Bd.rows, Ad.cols * Bd.cols, 0.0); for (int ra = 0; ra Kd(Range(ra*Bd.rows, (ra + 1)*Bd.rows), Range(ca*Bd.cols, (ca + 1)*Bd.cols)) = Bd.mul(Ad(ra, ca)); } } Mat K; Kd.convertTo(K, A.type()); return K; } // quaternion multiplication static Mat qmult(const Mat& s, const Mat& t) { CV_Assert(s.type() == CV_64FC1 && t.type() == CV_64FC1); CV_Assert(s.rows == 4 && s.cols == 1); CV_Assert(t.rows == 4 && t.cols == 1); double s0 = s.at(0,0); double s1 = s.at(1,0); double s2 = s.at(2,0); double s3 = s.at(3,0); double t0 = t.at(0,0); double t1 = t.at(1,0); double t2 = t.at(2,0); double t3 = t.at(3,0); Mat q(4, 1, CV_64FC1); q.at(0,0) = s0*t0 - s1*t1 - s2*t2 - s3*t3; q.at(1,0) = s0*t1 + s1*t0 + s2*t3 - s3*t2; q.at(2,0) = s0*t2 - s1*t3 + s2*t0 + s3*t1; q.at(3,0) = s0*t3 + s1*t2 - s2*t1 + s3*t0; return q; } // dq = homogeneous2dualQuaternion(H) // // H - 4x4 homogeneous transformation: [R | t; 0 0 0 | 1] // dq - 8x1 dual quaternion: static Mat homogeneous2dualQuaternion(const Mat& H) { CV_Assert(H.type() == CV_64FC1 && H.rows == 4 && H.cols == 4); Mat dualq(8, 1, CV_64FC1); Mat R = H(Rect(0, 0, 3, 3)); Mat t = H(Rect(3, 0, 1, 3)); Mat q = rot2quat(R); Mat qt = Mat::zeros(4, 1, CV_64FC1); t.copyTo(qt(Rect(0, 1, 1, 3))); Mat qprime = 0.5 * qmult(qt, q); q.copyTo(dualq(Rect(0, 0, 1, 4))); qprime.copyTo(dualq(Rect(0, 4, 1, 4))); return dualq; } // H = dualQuaternion2homogeneous(dq) // // H - 4x4 homogeneous transformation: [R | t; 0 0 0 | 1] // dq - 8x1 dual quaternion: static Mat dualQuaternion2homogeneous(const Mat& dualq) { CV_Assert(dualq.type() == CV_64FC1 && dualq.rows == 8 && dualq.cols == 1); Mat q = dualq(Rect(0, 0, 1, 4)); Mat qprime = dualq(Rect(0, 4, 1, 4)); Mat R = quat2rot(q); q.at(1,0) = -q.at(1,0); q.at(2,0) = -q.at(2,0); q.at(3,0) = -q.at(3,0); Mat qt = 2*qmult(qprime, q); Mat t = qt(Rect(0, 1, 1, 3)); Mat H = Mat::eye(4, 4, CV_64FC1); R.copyTo(H(Rect(0, 0, 3, 3))); t.copyTo(H(Rect(3, 0, 1, 3))); return H; } //Reference: //R. Y. Tsai and R. K. Lenz, "A new technique for fully autonomous and efficient 3D robotics hand/eye calibration." //In IEEE Transactions on Robotics and Automation, vol. 5, no. 3, pp. 345-358, June 1989. //C++ code converted from Zoran Lazarevic's Matlab code: //http://lazax.com/www.cs.columbia.edu/~laza/html/Stewart/matlab/handEye.m static void calibrateHandEyeTsai(const std::vector& Hg, const std::vector& Hc, Mat& R_cam2gripper, Mat& t_cam2gripper) { //Number of unique camera position pairs int K = static_cast((Hg.size()*Hg.size() - Hg.size()) / 2.0); //Will store: skew(Pgij+Pcij) Mat A(3*K, 3, CV_64FC1); //Will store: Pcij - Pgij Mat B(3*K, 1, CV_64FC1); // cout //Defines coordinate transformation from Gi to Gj //Hgi is from Gi (gripper) to RW (robot base) //Hgj is from Gj (gripper) to RW (robot base) // cout Mat M = Mat::zeros(3, 3, CV_64FC1); for (size_t i = 0; i Mat Hgij = homogeneousInverse(Hg[j]) * Hg[i]; Mat Hcij = Hc[j] * homogeneousInverse(Hc[i]); Mat Rgij = Hgij(Rect(0, 0, 3, 3)); Mat Rcij = Hcij(Rect(0, 0, 3, 3)); Mat a, b; Rodrigues(Rgij, a); Rodrigues(Rcij, b); M += b * a.t(); } } Mat eigenvalues, eigenvectors; eigen(M.t()*M, eigenvalues, eigenvectors); Mat v = Mat::zeros(3, 3, CV_64FC1); for (int i = 0; i for (size_t j = i+1; j Mat A = Mat::zeros(4, 4, CV_64FC1); for (size_t i = 0; i Mat Hgij = homogeneousInverse(Hg[j]) * Hg[i]; Mat Hcij = Hc[j] * homogeneousInverse(Hc[i]); Mat Rgij = Hgij(Rect(0, 0, 3, 3)); Mat Rcij = Hcij(Rect(0, 0, 3, 3)); Mat qgij = rot2quat(Rgij); double r0 = qgij.at(0,0); double rx = qgij.at(1,0); double ry = qgij.at(2,0); double rz = qgij.at(3,0); // Q(r) Appendix A Matx44d Qvi(r0, -rx, -ry, -rz, rx, r0, -rz, ry, ry, rz, r0, -rx, rz, -ry, rx, r0); Mat qcij = rot2quat(Rcij); r0 = qcij.at(0,0); rx = qcij.at(1,0); ry = qcij.at(2,0); rz = qcij.at(3,0); // W(r) Appendix A Matx44d Wvi(r0, -rx, -ry, -rz, rx, r0, rz, -ry, ry, -rz, r0, rx, rz, ry, -rx, r0); // Ai = (Q(vi') - W(vi))^T (Q(vi') - W(vi)) A += (Qvi - Wvi).t() * (Qvi - Wvi); } } Mat eigenvalues, eigenvectors; eigen(A, eigenvalues, eigenvectors); Mat R = quat2rot(eigenvectors.row(3).t()); R_cam2gripper = R; int K = static_cast((Hg.size()*Hg.size() - Hg.size()) / 2.0); Mat C(3*K, 3, CV_64FC1); Mat d(3*K, 1, CV_64FC1); Mat I3 = Mat::eye(3, 3, CV_64FC1); int idx = 0; for (size_t i = 0; i Mat Hgij = homogeneousInverse(Hg[j]) * Hg[i]; Mat Hcij = Hc[j] * homogeneousInverse(Hc[i]); Mat Rgij = Hgij(Rect(0, 0, 3, 3)); Mat tgij = Hgij(Rect(3, 0, 1, 3)); Mat tcij = Hcij(Rect(3, 0, 1, 3)); Mat I_tgij = I3 - Rgij; I_tgij.copyTo(C(Rect(0, 3*idx, 3, 3))); Mat A_RB = tgij - R*tcij; A_RB.copyTo(d(Rect(0, 3*idx, 1, 3))); } } Mat t; solve(C, d, t, DECOMP_SVD); t_cam2gripper = t; } static Mat_ normalizeRotation(const Mat_& R_) { // Make R unit determinant Mat_ R = R_.clone(); double det = determinant(R); if (std::fabs(det) Matx33d diag(1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, -1.0); R = u*diag*vt; } return R; } //Reference: //N. Andreff, R. Horaud, B. Espiau, "On-line Hand-Eye Calibration." //In Second International Conference on 3-D Digital Imaging and Modeling (3DIM'99), pages 430-436, 1999. //Matlab code: http://math.loyola.edu/~mili/Calibration/ static void calibrateHandEyeAndreff(const std::vector& Hg, const std::vector& Hc, Mat& R_cam2gripper, Mat& t_cam2gripper) { int K = static_cast((Hg.size()*Hg.size() - Hg.size()) / 2.0); Mat A(12*K, 12, CV_64FC1); Mat B(12*K, 1, CV_64FC1); Mat I9 = Mat::eye(9, 9, CV_64FC1); Mat I3 = Mat::eye(3, 3, CV_64FC1); Mat O9x3 = Mat::zeros(9, 3, CV_64FC1); Mat O9x1 = Mat::zeros(9, 1, CV_64FC1); int idx = 0; for (size_t i = 0; i Mat Hgij = homogeneousInverse(Hg[j]) * Hg[i]; Mat Hcij = Hc[j] * homogeneousInverse(Hc[i]); Mat Rgij = Hgij(Rect(0, 0, 3, 3)); Mat Rcij = Hcij(Rect(0, 0, 3, 3)); Mat tgij = Hgij(Rect(3, 0, 1, 3)); Mat tcij = Hcij(Rect(3, 0, 1, 3)); //Eq 10 Mat a00 = I9 - kron(Rgij, Rcij); Mat a01 = O9x3; Mat a10 = kron(I3, tcij.t()); Mat a11 = I3 - Rgij; a00.copyTo(A(Rect(0, idx*12, 9, 9))); a01.copyTo(A(Rect(9, idx*12, 3, 9))); a10.copyTo(A(Rect(0, idx*12 + 9, 9, 3))); a11.copyTo(A(Rect(9, idx*12 + 9, 3, 3))); O9x1.copyTo(B(Rect(0, idx*12, 1, 9))); tgij.copyTo(B(Rect(0, idx*12 + 9, 1, 3))); } } Mat X; solve(A, B, X, DECOMP_SVD); Mat R = X(Rect(0, 0, 1, 9)); int newSize[] = {3, 3}; R = R.reshape(1, 2, newSize); //Eq 15 R_cam2gripper = normalizeRotation(R); t_cam2gripper = X(Rect(0, 9, 1, 3)); } //Reference: //K. Daniilidis, "Hand-Eye Calibration Using Dual Quaternions." //In The International Journal of Robotics Research,18(3): 286-298, 1998. //Matlab code: http://math.loyola.edu/~mili/Calibration/ static void calibrateHandEyeDaniilidis(const std::vector& Hg, const std::vector& Hc, Mat& R_cam2gripper, Mat& t_cam2gripper) { int K = static_cast((Hg.size()*Hg.size() - Hg.size()) / 2.0); Mat T = Mat::zeros(6*K, 8, CV_64FC1); int idx = 0; for (size_t i = 0; i Mat Hgij = homogeneousInverse(Hg[j]) * Hg[i]; Mat Hcij = Hc[j] * homogeneousInverse(Hc[i]); Mat dualqa = homogeneous2dualQuaternion(Hgij); Mat dualqb = homogeneous2dualQuaternion(Hcij); Mat a = dualqa(Rect(0, 1, 1, 3)); Mat b = dualqb(Rect(0, 1, 1, 3)); Mat aprime = dualqa(Rect(0, 5, 1, 3)); Mat bprime = dualqb(Rect(0, 5, 1, 3)); //Eq 31 Mat s00 = a - b; Mat s01 = skew(a + b); Mat s10 = aprime - bprime; Mat s11 = skew(aprime + bprime); Mat s12 = a - b; Mat s13 = skew(a + b); s00.copyTo(T(Rect(0, idx*6, 1, 3))); s01.copyTo(T(Rect(1, idx*6, 3, 3))); s10.copyTo(T(Rect(0, idx*6 + 3, 1, 3))); s11.copyTo(T(Rect(1, idx*6 + 3, 3, 3))); s12.copyTo(T(Rect(4, idx*6 + 3, 1, 3))); s13.copyTo(T(Rect(5, idx*6 + 3, 3, 3))); } } Mat w, u, vt; SVDecomp(T, w, u, vt); Mat v = vt.t(); Mat u1 = v(Rect(6, 0, 1, 4)); Mat v1 = v(Rect(6, 4, 1, 4)); Mat u2 = v(Rect(7, 0, 1, 4)); Mat v2 = v(Rect(7, 4, 1, 4)); //Solves Eq 34, Eq 35 Mat ma = u1.t()*v1; Mat mb = u1.t()*v2 + u2.t()*v1; Mat mc = u2.t()*v2; double a = ma.at(0,0); double b = mb.at(0,0); double c = mc.at(0,0); double s1 = (-b + sqrt(b*b - 4*a*c)) / (2*a); double s2 = (-b - sqrt(b*b - 4*a*c)) / (2*a); Mat sol1 = s1*s1*u1.t()*u1 + 2*s1*u1.t()*u2 + u2.t()*u2; Mat sol2 = s2*s2*u1.t()*u1 + 2*s2*u1.t()*u2 + u2.t()*u2; double s, val; if (sol1.at(0,0) > sol2.at(0,0)) { s = s1; val = sol1.at(0,0); } else { s = s2; val = sol2.at(0,0); } double lambda2 = sqrt(1.0 / val); double lambda1 = s * lambda2; Mat dualq = lambda1 * v(Rect(6, 0, 1, 8)) + lambda2*v(Rect(7, 0, 1, 8)); Mat X = dualQuaternion2homogeneous(dualq); Mat R = X(Rect(0, 0, 3, 3)); Mat t = X(Rect(3, 0, 1, 3)); R_cam2gripper = R; t_cam2gripper = t; } void calibrateHandEye(InputArrayOfArrays R_gripper2base, InputArrayOfArrays t_gripper2base, InputArrayOfArrays R_target2cam, InputArrayOfArrays t_target2cam, OutputArray R_cam2gripper, OutputArray t_cam2gripper, HandEyeCalibrationMethod method) { CV_Assert(R_gripper2base.isMatVector() && t_gripper2base.isMatVector() && R_target2cam.isMatVector() && t_target2cam.isMatVector()); std::cout Mat m = Mat::eye(4, 4, CV_64FC1); Mat R = m(Rect(0, 0, 3, 3)); if(R_target2cam_[i].size() == Size(3, 3)) R_target2cam_[i].convertTo(R, CV_64F); else Rodrigues(R_target2cam_[i], R); Mat t = m(Rect(3, 0, 1, 3)); t_target2cam_[i].convertTo(t, CV_64F); Hc.push_back(m); } //Hc target-camera RMat //Hg base-hand Rmat //Rcg R Result (单位矩阵) //Tcg T Result (0,0,0) Mat Rcg = Mat::eye(3, 3, CV_64FC1); Mat Tcg = Mat::zeros(3, 1, CV_64FC1); switch (method) { case CALIB_HAND_EYE_TSAI: calibrateHandEyeTsai(Hg, Hc, Rcg, Tcg); break; case CALIB_HAND_EYE_PARK: calibrateHandEyePark(Hg, Hc, Rcg, Tcg); break; case CALIB_HAND_EYE_HORAUD: calibrateHandEyeHoraud(Hg, Hc, Rcg, Tcg); break; case CALIB_HAND_EYE_ANDREFF: calibrateHandEyeAndreff(Hg, Hc, Rcg, Tcg); break; case CALIB_HAND_EYE_DANIILIDIS: calibrateHandEyeDaniilidis(Hg, Hc, Rcg, Tcg); break; default: break; } Rcg.copyTo(R_cam2gripper); Tcg.copyTo(t_cam2gripper); } //Reference: //M. Shah, "Solving the robot-world/hand-eye calibration problem using the kronecker product" //Journal of Mechanisms and Robotics, vol. 5, p. 031007, 2013. //Matlab code: http://math.loyola.edu/~mili/Calibration/ static void calibrateRobotWorldHandEyeShah(const std::vector& cRw, const std::vector& ctw, const std::vector& gRb, const std::vector& gtb, Matx33d& wRb, Matx31d& wtb, Matx33d& cRg, Matx31d& ctg) { Mat_ T = Mat_::zeros(9, 9); for (size_t i = 0; i for (int j = 0; j Mat cRw_ = -cRw[i]; cRw_.copyTo(A(Range(i*3, (i+1)*3), Range(0,3))); I3.copyTo(A(Range(i*3, (i+1)*3), Range(3,6))); Mat ctw_ = ctw[i] - kron(gtb[i].t(), I3) * Z; ctw_.copyTo(b(Range(i*3, (i+1)*3), Range::all())); } Mat_ t; solve(A, b, t, DECOMP_SVD); for (int i = 0; i const int n = static_cast(cRw.size()); Mat_ A = Mat_::zeros(12*n, 24); Mat_ b = Mat_::zeros(12*n, 1); Mat_ I3 = Mat_::eye(3,3); for (int i = 0; i CV_Assert(R_base2gripper.isMatVector() && t_base2gripper.isMatVector() && R_world2cam.isMatVector() && t_world2cam.isMatVector()); std::vector R_base2gripper_tmp, t_base2gripper_tmp; R_base2gripper.getMatVector(R_base2gripper_tmp); t_base2gripper.getMatVector(t_base2gripper_tmp); std::vector R_world2cam_tmp, t_world2cam_tmp; R_world2cam.getMatVector(R_world2cam_tmp); t_world2cam.getMatVector(t_world2cam_tmp); CV_Assert(R_base2gripper_tmp.size() == t_base2gripper_tmp.size() && R_world2cam_tmp.size() == t_world2cam_tmp.size() && R_base2gripper_tmp.size() == R_world2cam_tmp.size()); CV_Check(R_base2gripper_tmp.size(), R_base2gripper_tmp.size() >= 3, "At least 3 measurements are needed"); // Convert to double std::vector R_base2gripper_, t_base2gripper_; std::vector R_world2cam_, t_world2cam_; R_base2gripper_.reserve(R_base2gripper_tmp.size()); t_base2gripper_.reserve(R_base2gripper_tmp.size()); R_world2cam_.reserve(R_world2cam_tmp.size()); t_world2cam_.reserve(R_base2gripper_tmp.size()); // Convert to rotation matrix if needed for (size_t i = 0; i Mat rot = R_base2gripper_tmp[i]; Mat R(3, 3, CV_64FC1); if (rot.size() == Size(3,3)) { rot.convertTo(R, CV_64F); R_base2gripper_.push_back(R); } else { Rodrigues(rot, R); R_base2gripper_.push_back(R); } Mat tvec = t_base2gripper_tmp[i]; Mat t; tvec.convertTo(t, CV_64F); t_base2gripper_.push_back(t); } { Mat rot = R_world2cam_tmp[i]; Mat R(3, 3, CV_64FC1); if (rot.size() == Size(3,3)) { rot.convertTo(R, CV_64F); R_world2cam_.push_back(R); } else { Rodrigues(rot, R); R_world2cam_.push_back(R); } Mat tvec = t_world2cam_tmp[i]; Mat t; tvec.convertTo(t, CV_64F); t_world2cam_.push_back(t); } } CV_Assert(R_world2cam_.size() == t_world2cam_.size() && R_base2gripper_.size() == t_base2gripper_.size() && R_world2cam_.size() == R_base2gripper_.size()); Matx33d wRb, cRg; Matx31d wtb, ctg; switch (method) { case CALIB_ROBOT_WORLD_HAND_EYE_SHAH: calibrateRobotWorldHandEyeShah(R_world2cam_, t_world2cam_, R_base2gripper_, t_base2gripper_, wRb, wtb, cRg, ctg); break; case CALIB_ROBOT_WORLD_HAND_EYE_LI: calibrateRobotWorldHandEyeLi(R_world2cam_, t_world2cam_, R_base2gripper_, t_base2gripper_, wRb, wtb, cRg, ctg); break; } Mat(wRb).copyTo(R_base2world); Mat(wtb).copyTo(t_base2world); Mat(cRg).copyTo(R_gripper2cam); Mat(ctg).copyTo(t_gripper2cam); } } |

【本文地址】

今日新闻 |

点击排行 |

|

推荐新闻 |

图片新闻 |

|

专题文章 |

下图所示是一种常见的机器人视觉系统,由机器人和固定在机械臂末端的相机组成(eye-in-hand),记录了一组机器人的相对运动,并由此引出了手眼标定方程:AX = XB。式中,A表示相机两次运动之间的相对位姿,B表示机器臂末端两次运动之间的相对位姿;X表示相机到到末端执行器的相对位姿,即手眼关系矩阵。且:A = A2A-1 ; B = B2B-1 ;式中,A1和A2表示相机两次运动中相对于目标物体的位姿矩阵;B1和B2表示机械臂末端两次运动中基座相对末端的位姿矩阵。

下图所示是一种常见的机器人视觉系统,由机器人和固定在机械臂末端的相机组成(eye-in-hand),记录了一组机器人的相对运动,并由此引出了手眼标定方程:AX = XB。式中,A表示相机两次运动之间的相对位姿,B表示机器臂末端两次运动之间的相对位姿;X表示相机到到末端执行器的相对位姿,即手眼关系矩阵。且:A = A2A-1 ; B = B2B-1 ;式中,A1和A2表示相机两次运动中相对于目标物体的位姿矩阵;B1和B2表示机械臂末端两次运动中基座相对末端的位姿矩阵。

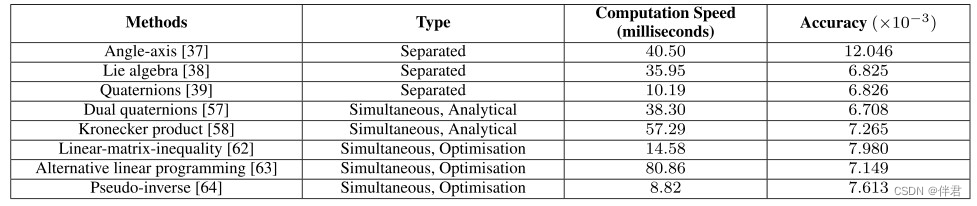

先来分别讲述一下三种方法的优缺点(计算时间和精度仅供参考):

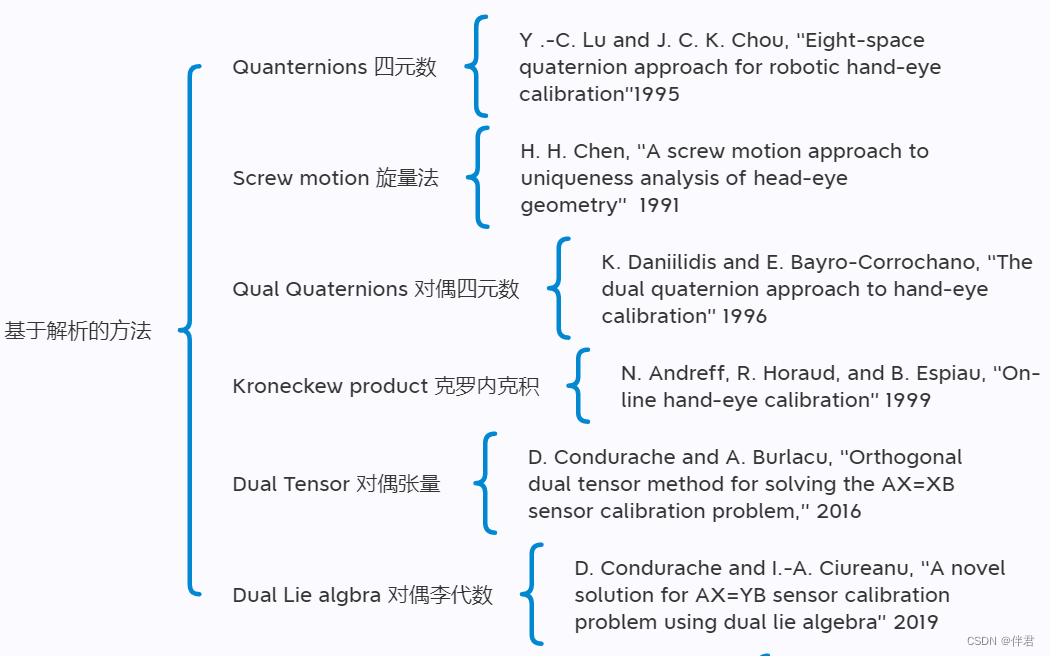

先来分别讲述一下三种方法的优缺点(计算时间和精度仅供参考): 方法主要有轴-角法、李代数、克罗内可积以及四元数法,具体如下图所示:

方法主要有轴-角法、李代数、克罗内可积以及四元数法,具体如下图所示:  旋转和平移参数同时解决 旋转和平移参数同时解决的方法原理具体如下:

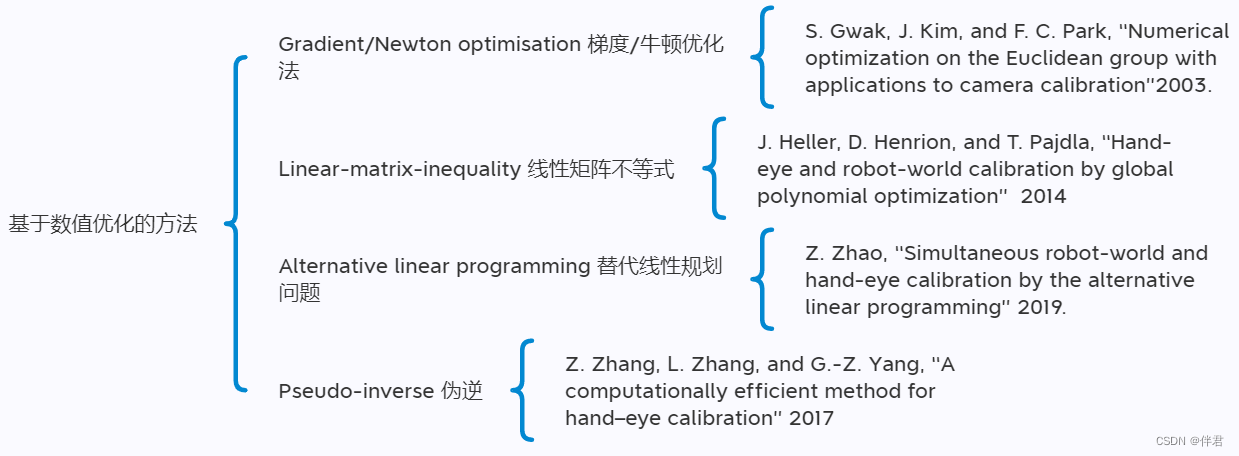

旋转和平移参数同时解决 旋转和平移参数同时解决的方法原理具体如下:  旋转和平移参数同时解决的方法具体又可以分为基于解析的方法以及基于数值优化的方法:

旋转和平移参数同时解决的方法具体又可以分为基于解析的方法以及基于数值优化的方法:

AX=XB齐次方程求解方法对比分析

AX=XB齐次方程求解方法对比分析

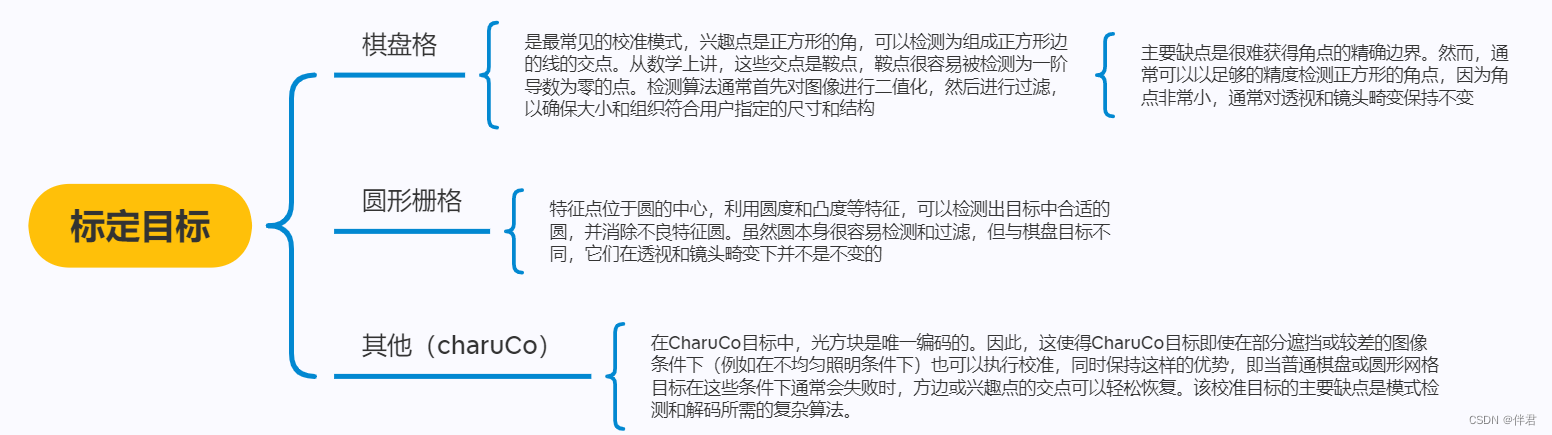

三种标定目标的特点如下所示:

三种标定目标的特点如下所示:  既然说到了这,那就谈一下传感器为其他类型所用到的标定物,以线激光轮廓传感器为例,它所常用的标定目标为标准球,基于球形标定物的标定算法该算法通过线激光传感器扫描球形标定物,以标准球的球心作为标定点,控制机器人带动线激光传感器多位姿扫描标准球,再根据球形标定物的几何特性和勾股定理求取标准球心在线激光传感器坐标系下的坐标。当然了,也有以阶梯形、圆柱作为标定物来进行线激光轮廓传感器的手眼标定,这时用到的手眼关系矩阵可与前面所述的不同,不过都是基于标定物的几何特性建立起手眼关系矩阵。

既然说到了这,那就谈一下传感器为其他类型所用到的标定物,以线激光轮廓传感器为例,它所常用的标定目标为标准球,基于球形标定物的标定算法该算法通过线激光传感器扫描球形标定物,以标准球的球心作为标定点,控制机器人带动线激光传感器多位姿扫描标准球,再根据球形标定物的几何特性和勾股定理求取标准球心在线激光传感器坐标系下的坐标。当然了,也有以阶梯形、圆柱作为标定物来进行线激光轮廓传感器的手眼标定,这时用到的手眼关系矩阵可与前面所述的不同,不过都是基于标定物的几何特性建立起手眼关系矩阵。