
Setting Up and Starting a Hadoop 3.3.0 Cluster with Docker


Hadoop configuration

Docker

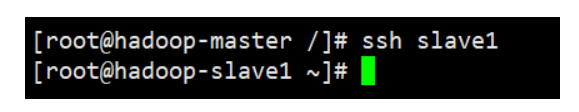

Install Docker:

```bash
$ yum install docker
```

Enable Docker to start on boot:

```bash
$ systemctl enable docker
```

Start Docker:

```bash
$ systemctl start docker
```

Check the Docker version:

```
[root@VM-16-13-centos ~]$ docker -v
Docker version 1.13.1, build 0be3e21/1.13.1
```

Configure the Aliyun registry mirror to speed up Docker image pulls:

```bash
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json
```

Configure YARN in yarn-site.xml:

```xml
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
    </property>
</configuration>
```

Edit `slaves` (renamed `workers` in Hadoop 3.x):

```
slave1
slave2
```

Edit hadoop-env.sh and add the following:

```bash
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
export JAVA_HOME=/usr/local/jdk
```

Upload the files to the host machine, then use `docker cp` to copy them into the container:

```bash
$ docker cp ~/hadoop test-master:/usr/local/hadoop/etc
```

Build the image by committing the running test-master container:

```bash
$ docker commit test-master centos-hadoop
$ docker stop test-master test-slave1 test-slave2 # stop the running containers
```

Hadoop cluster configuration and startup

```bash
$ docker run --name hadoop-master --hostname hadoop-master -d -P -p 50070:50070 -p 8088:8088 -p 9001:9001 -p 8030:8030 -p 8031:8031 -p 8032:8032 centos-hadoop
$ docker run --name hadoop-slave1 --hostname hadoop-slave1 -d -P centos-hadoop
$ docker run --name hadoop-slave2 --hostname hadoop-slave2 -d -P centos-hadoop
```

In Hadoop 3.x the NameNode web UI listens on port 9870 by default (50070 was the Hadoop 2.x default), so also publish `-p 9870:9870` unless `dfs.namenode.http-address` was set to port 50070 in hdfs-site.xml.

As before, open three terminals and enter the three running containers:

```bash
$ docker exec -it hadoop-master bash
$ docker exec -it hadoop-slave1 bash
$ docker exec -it hadoop-slave2 bash
```

Inside each container, run `vim /etc/hosts` to see that container's IP, then add the following entries in every container:

```
172.18.0.2 master
172.18.0.3 slave1
172.18.0.4 slave2
```

Configure passwordless SSH login by running the following commands in every container:

```bash
$ ssh-keygen # several prompts follow; press Enter at each one without typing anything
$ ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@master
$ ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@slave1
$ ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@slave2
```

Running `ssh-keygen` in each container looks like this:

```
[root@hadoop-master /]# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:0gySLsZy/97FMoRM1DBZLwdRC+14P1NKouLklyICsag root@hadoop-master
The key's randomart image is:
+---[RSA 3072]----+
| +==+. |
| o...+.. |
| o o .o+ |
|.. . + =.o+ . . |
|oo= . + So + o |
|++ o oo.. = |
|.. .+ .o.o o |
|E . ..+.o+ |
| . ooo. |
+----[SHA256]-----+
[root@hadoop-master /]#
```

While setting up passwordless login in each container, `ssh-copy-id` asks for the root user's password. Once it succeeds, you should be able to log in to the other nodes without a password, for example by SSHing from the master node into slave1.

On the master node, go to the HADOOP_HOME directory:

```bash
$ cd $HADOOP_HOME
```

Enter its sbin directory and run the following commands:

```bash
$ hdfs namenode -format
$ hdfs --daemon start namenode
$ hdfs --daemon start datanode
```

Finally, run the start script:

```bash
$ ./start-all.sh # start command
$ ./stop-all.sh # stop command
```

A successful startup:

```
[root@hadoop-master sbin]# ./start-all.sh
Starting namenodes on [master]
master: namenode is running as process 343. Stop it first.
Starting datanodes
Last login: Mon Jan 4 04:31:52 UTC 2021
slave2: WARNING: /usr/local/hadoop/logs does not exist. Creating.
slave1: WARNING: /usr/local/hadoop/logs does not exist. Creating.
Starting secondary namenodes [master]
Last login: Mon Jan 4 04:31:52 UTC 2021
Starting resourcemanager
Last login: Mon Jan 4 04:32:00 UTC 2021
Starting nodemanagers
Last login: Mon Jan 4 04:32:11 UTC 2021
[root@hadoop-master sbin]# jps
1344 Jps
448 DataNode
343 NameNode
925 SecondaryNameNode
1183 ResourceManager
[root@hadoop-master sbin]#
#############################
[root@hadoop-slave2 /]# jps
296 DataNode
506 Jps
412 NodeManager
[root@hadoop-slave2 /]#
##########################
[root@hadoop-slave1 /]# jps
448 NodeManager
332 DataNode
541 Jps
[root@hadoop-slave1 /]#
```

The message `namenode is running as process 343. Stop it first.` appears because the NameNode had already been started with `hdfs --daemon start namenode`; likewise, the DataNode in the master's `jps` output is the one started manually with `hdfs --daemon start datanode`, since the workers file lists only slave1 and slave2.
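The workers and hadoop-env.sh edits above can also be scripted on the host before running `docker cp`. The following is a minimal sketch, assuming the config directory is a local copy of the container's full `etc/hadoop` directory (for example pulled out first with `docker cp test-master:/usr/local/hadoop/etc/hadoop ~/hadoop`); the function name `prepare_hadoop_conf` is hypothetical, and the node names and `JAVA_HOME` path are the ones used in this walkthrough.

```bash
#!/usr/bin/env bash
# Sketch: write the workers file and append the run-as-root settings to
# hadoop-env.sh in a local config directory (hypothetical helper; node names
# and JAVA_HOME follow this walkthrough).
set -euo pipefail

prepare_hadoop_conf() {
    local conf_dir="$1"
    mkdir -p "$conf_dir"

    # Hadoop 3.x reads the worker list from "workers" (formerly "slaves")
    printf '%s\n' slave1 slave2 > "$conf_dir/workers"

    # Append the exports only once; grep -s hides the error if the file is missing
    if ! grep -qs '^export HDFS_NAMENODE_USER=' "$conf_dir/hadoop-env.sh"; then
        cat >> "$conf_dir/hadoop-env.sh" <<'EOF'
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
export JAVA_HOME=/usr/local/jdk
EOF
    fi
}
```

Usage: `prepare_hadoop_conf ~/hadoop`, then `docker cp ~/hadoop test-master:/usr/local/hadoop/etc` as in the article. Working on a copy of the full directory matters because `docker cp` overwrites files with the same name, so a directory holding only these two files would replace the container's hadoop-env.sh with just the exports.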
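The `/etc/hosts` step above has to be repeated in all three containers, so a small helper that appends the entries only when they are missing avoids duplicates. This is a sketch under stated assumptions: the IP/hostname pairs are the ones from this article (check each container's real IP first, e.g. in its own `/etc/hosts`), and `add_cluster_hosts` / `host_present` are hypothetical names. Hostnames are compared as exact fields, so the `hadoop-master` line Docker already writes does not count as `master`.

```bash
#!/usr/bin/env bash
# Sketch: add the cluster host entries to a hosts file without duplicating them.
# The IPs below are the ones used in this walkthrough (an assumption for your setup).
set -euo pipefail

CLUSTER_HOSTS=(
    "172.18.0.2 master"
    "172.18.0.3 slave1"
    "172.18.0.4 slave2"
)

host_present() {
    # Succeeds if hostname $2 appears as an exact field (column 2 onward) in file $1,
    # ignoring comment lines
    awk -v n="$2" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                   END { exit !found }' "$1"
}

add_cluster_hosts() {
    local hosts_file="$1" entry ip name
    for entry in "${CLUSTER_HOSTS[@]}"; do
        read -r ip name <<< "$entry"
        if ! host_present "$hosts_file" "$name"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

Run `add_cluster_hosts /etc/hosts` as root inside each container. Docker may regenerate a container's `/etc/hosts` when it restarts, so it may need to be re-run after a restart.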
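The interactive `ssh-keygen` and `ssh-copy-id` steps above can be run non-interactively. The sketch below assumes it runs as root inside each container and that `master`, `slave1` and `slave2` already resolve through `/etc/hosts`; the function names are hypothetical. `ssh-keygen -N ''` sets an empty passphrase (the same result as pressing Enter at every prompt), and `ssh -o BatchMode=yes` fails instead of prompting, which makes it a convenient check that passwordless login really works.

```bash
#!/usr/bin/env bash
# Sketch: non-interactive key generation and distribution for the three nodes.
# Assumes root inside each container and working /etc/hosts entries.
set -euo pipefail

NODES=(master slave1 slave2)   # hostnames from the /etc/hosts step

ensure_key() {
    # Generate an RSA key pair with an empty passphrase, only if none exists yet
    local key="$1"
    if [ ! -f "$key" ]; then
        mkdir -p -m 700 "$(dirname "$key")"
        ssh-keygen -q -t rsa -N '' -f "$key"
    fi
}

distribute_key() {
    # Copy the public key to every node; ssh-copy-id asks for root's password once per node
    local key="$1" host
    for host in "${NODES[@]}"; do
        ssh-copy-id -i "$key" -p 22 "root@$host"
    done
}

verify_passwordless() {
    # BatchMode=yes makes ssh fail rather than prompt, so a password prompt counts as failure
    local host
    for host in "${NODES[@]}"; do
        ssh -o BatchMode=yes -p 22 "root@$host" true && echo "ok: $host" || echo "FAILED: $host" >&2
    done
}
```

A possible sequence in each container: `ensure_key /root/.ssh/id_rsa`, then `distribute_key /root/.ssh/id_rsa`, then `verify_passwordless`.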
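The `jps` listings above are how this walkthrough confirms the cluster is up. A minimal sketch of that check as a script, assuming this article's layout (NameNode, SecondaryNameNode and ResourceManager on master; DataNode and NodeManager on each slave); `check_daemons` is a hypothetical helper that compares the class-name column of `jps` output exactly, so `NameNode` does not match `SecondaryNameNode`.

```bash
#!/usr/bin/env bash
# Sketch: verify that the expected Hadoop daemons appear in jps output.
# Expected roles per node follow this walkthrough's layout (an assumption).
set -euo pipefail

check_daemons() {
    # $1 = jps output; remaining arguments = daemon names that must be listed
    local jps_out="$1" d missing=0
    shift
    for d in "$@"; do
        # jps prints "<pid> <MainClassName>", so compare the second column exactly
        if ! awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' <<< "$jps_out"; then
            echo "missing: $d" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, on master: `check_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager && echo "master OK"`; on each slave: `check_daemons "$(jps)" DataNode NodeManager`.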

