
Python Web Scraper


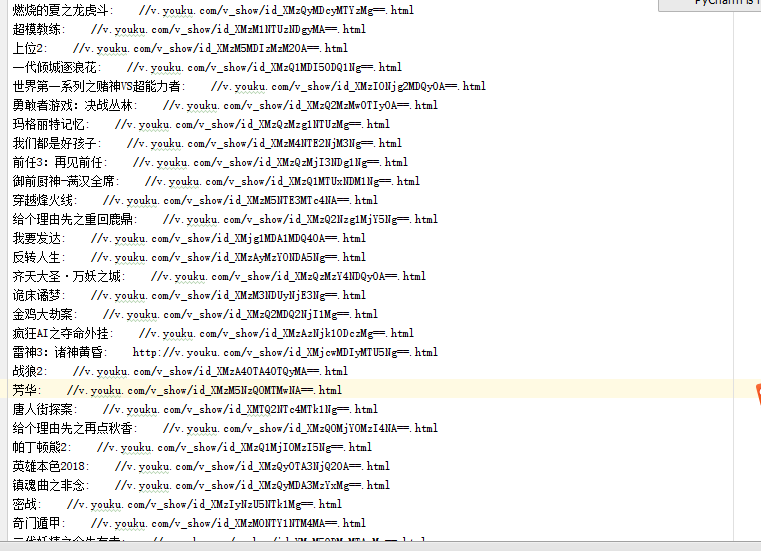

I've been learning web scraping recently, using the BeautifulSoup4 library. The idea is to scrape the names and links of the movies listed on Youku and save them to a text file. It's a fairly simple requirement, and this is the first scraper I've written. Here is the code for reference:

    # coding: utf-8

    import os
    import re
    import time

    import requests
    from bs4 import BeautifulSoup

    '''Scrape movies from the Youku site: http://www.youku.com/ '''

    url = "http://list.youku.com/category/show/c_96_s_1_d_1_u_1.html"
    h = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:58.0) Gecko/20100101 Firefox/58.0"}

    # Movie links end in "==.html"; the dot is escaped so it matches literally.
    movie_href = re.compile(r"==\.html")


    # Save to a text file in the movie folder
    def write_movie(list_a):
        currentPath = os.path.dirname(os.path.realpath(__file__))
        movieDir = os.path.join(currentPath, "movie")
        os.makedirs(movieDir, exist_ok=True)  # create the folder if it is missing
        moviePath = os.path.join(movieDir, "youku_movie_address.text")

        with open(moviePath, encoding="utf-8", mode="a") as fp:
            for x in list_a:
                # Only anchors with no visible text are written; skip any
                # that lack a title attribute instead of raising KeyError.
                if x.get_text() == "" and x.has_attr("title"):
                    fp.write(x["title"] + ": " + x["href"] + "\n")
            fp.write("-------------------------------over-----------------------------" + "\n")


    # First page
    res = requests.get(url, headers=h)
    print(res.url)
    soup = BeautifulSoup(res.content, "html.parser")
    write_movie(soup.find_all(href=movie_href, target="_blank"))

    for num in range(2, 1000):
        # Get the href attribute of the "next page" link
        fanye_a = soup.find(charset="-4-1-999")
        if fanye_a is None:
            break  # no "next page" link, so this was the last page
        fanye_href = fanye_a["href"]
        print(fanye_href)

        # Request the page
        ee = requests.get("http:" + fanye_href, headers=h)
        time.sleep(3)
        print(ee.url)

        soup = BeautifulSoup(ee.content, "html.parser")

        # Call the write function
        write_movie(soup.find_all(href=movie_href, target="_blank"))
        time.sleep(6)

After running, the text file contains one `title: link` line per movie, with an `over` separator line after each page.
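The page-turning part of the script can also be tried on its own, without touching the site. The sketch below is only an illustration under assumptions: the `crawl` function, the `get_next_href` callback, the `FAKE_NEXT` table, and the `example.com` URLs are all made up for this example and are not part of the original script. It follows "next page" hrefs until there is none, and uses the standard library's `urljoin` as an alternative to prepending `"http:"` by hand to a protocol-relative href.

```python
from urllib.parse import urljoin


def crawl(start_url, get_next_href, max_pages=1000):
    """Yield page URLs by following "next page" hrefs until none is left.

    get_next_href(url) stands in for the fetch-and-parse step (hypothetical):
    it returns the next page's href, possibly protocol-relative, or None
    when the current page is the last one.
    """
    url = start_url
    for _ in range(max_pages):
        yield url
        href = get_next_href(url)
        if href is None:
            break  # last page reached
        # urljoin resolves "//host/path" using the current page's scheme
        url = urljoin(url, href)


# Hypothetical three-page site, used only to exercise the loop.
FAKE_NEXT = {
    "http://example.com/list_1.html": "//example.com/list_2.html",
    "http://example.com/list_2.html": "//example.com/list_3.html",
    "http://example.com/list_3.html": None,
}

pages = list(crawl("http://example.com/list_1.html", FAKE_NEXT.get))
print(pages)
```

In a real run, `get_next_href` would download the page, parse it with BeautifulSoup, and return the `href` of the "next page" anchor, or `None` if `soup.find` does not find one.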
