| sqoop job 实现自动增量导入 | 您所在的位置:网站首页 › sqoop创建job › sqoop job 实现自动增量导入 |

sqoop job 实现自动增量导入

二、普通增量导入

# 这个问题在于我们每次再增量导入的时候就要手动去更改--last-value \的值。

# 否则就每次都是全量导入。显得不灵活sqoop import --connect jdbc:mysql://172.16.100.173:3306/hdb \--username root --password oracletest \--table autoextend \-m 1 \--incremental append \--check-column id \--last-value 11 \--fields-terminated-by '\t' \--hive-import --hive-database hdb --hive-table autoextend

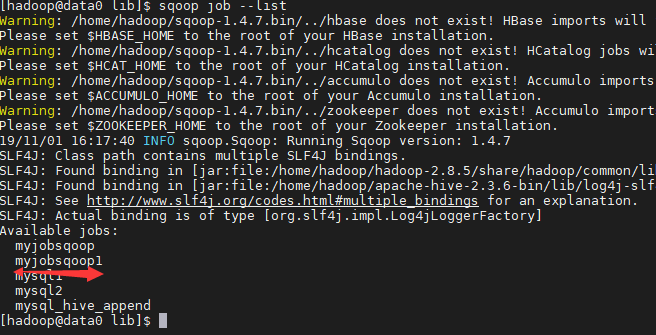

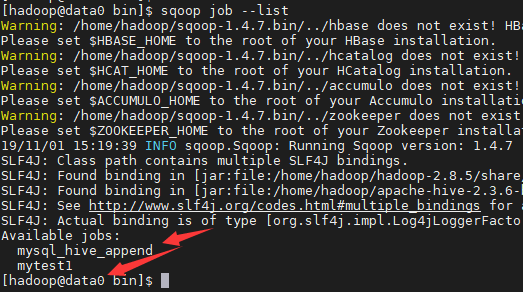

三、sqoop job增量导入 1、sqoop job 参数 Job management arguments: --create Create a new saved job --delete Delete a saved job --exec Run a saved job --help Print usage instructions --list List saved jobs --meta-connect Specify JDBC connect string for the metastore --show Show the parameters for a saved job --verbose Print more information while working 2、查看已经存在的job sqoop job --list  3、删除sqoop job

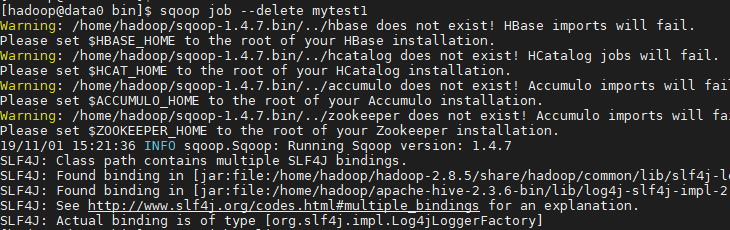

3、删除sqoop job

sqoop job --delete mytest1

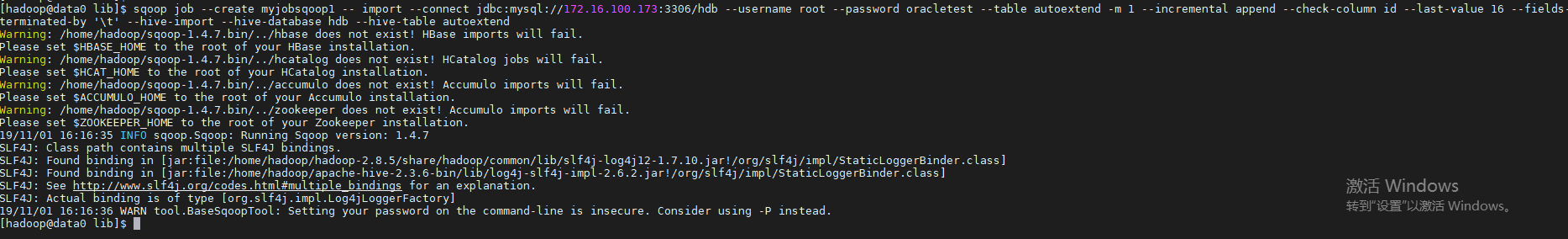

sqoop job每次会为我们维护last-value的值,达到自动增量导入的目的 sqoop job --create myjobsqoop -- import --connect jdbc:mysql://172.16.100.173:3306/hdb --username root --password oracletest --table autoextend -m 1 --incremental append --check-column id --last-value 16 --fields-terminated-by '\t' --hive-import --hive-database hdb --hive-table autoextend

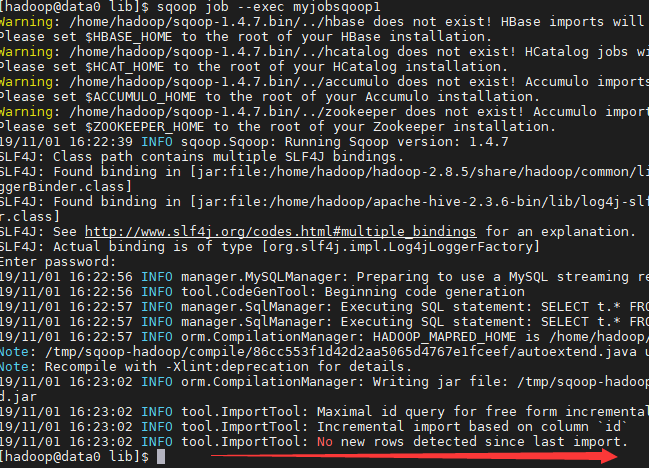

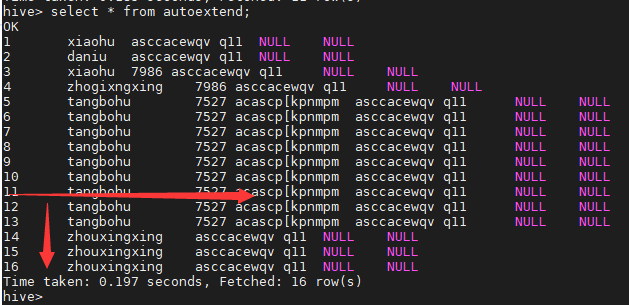

查看job 1)无新数据运行 sqoop job --exec myjobsqoop1

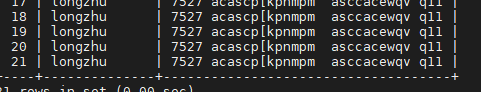

2)有新数据 MySQL新写入

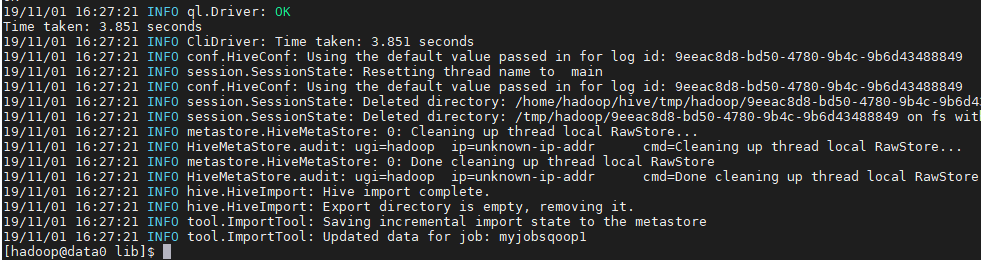

运行sqoop job --exec myjobsqoop1

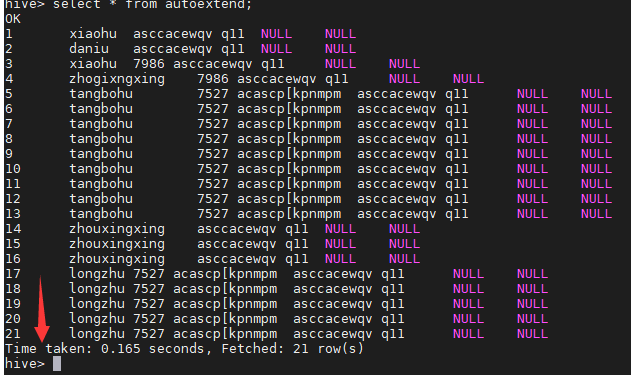

查看hive表

转载内容: 创建job ## -- import 中间有个空格 bin/sqoop job --create mysql_hive_append -- import --connect jdbc:mysql://hadoop001:3306/learn \ --username root --password 123456 \ --table user \ -m 1 \ --incremental append \ --check-column user_id \ --last-value 0 \ --fields-terminated-by ',' \ --hive-import \ --hive-table zzy.test3sqoop.Sqoop: Got exception running Sqoop:java.lang.NullPointerException,没遇到可以跳过 19/09/20 09:57:47 ERROR sqoop.Sqoop: Got exception running Sqoop: java.lang.NullPointerException at org.json.JSONObject.(JSONObject.java:144) ## 缺少的东西 at org.apache.sqoop.util.SqoopJsonUtil.getJsonStringforMap(SqoopJsonUtil.java:43) at org.apache.sqoop.SqoopOptions.writeProperties(SqoopOptions.java:785) at org.apache.sqoop.metastore.hsqldb.HsqldbJobStorage.createInternal(HsqldbJobStorage.java:399) at org.apache.sqoop.metastore.hsqldb.HsqldbJobStorage.create(HsqldbJobStorage.java:379) at org.apache.sqoop.tool.JobTool.createJob(JobTool.java:181) at org.apache.sqoop.tool.JobTool.run(JobTool.java:294) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252)查了半天是缺少java-json.jar这么一个jar包。找了半天大部分CSDN都要钱。下面整理了一些可下载的地址。 下载地址(需要FQ) 百度网盘 如果还是报同样的错误可能还需要下面这些包百度网盘 运行job bin/sqoop job --exec mysql_hive_append我这里明明设置了密码。但是还是要求我再输入一次mysql的连接密码。暂时没解决,输入就是了。 [zzy@hadoop001 sqoop-1.4.7]$bin/sqoop job --exec mysql_hive_append 19/09/20 10:20:29 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/opt/moudle/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/opt/moudle/hive-1.2.1/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] Enter password: 部分内容整理自:https://blog.csdn.net/weixin_43326165/article/details/101053116 |

【本文地址】

公司简介

联系我们